Anatomy and Generalities

The limbic system is a phylogenetically old group of brain structures that plays an important role in learning and memory. It is also involved in the generation, integration and control of emotions and of the behavioral responses to them. For instance, interpreting facial expressions and the underlying emotional state of a person, or evaluating a dangerous situation and deciding on an appropriate behavioral response (e.g. "fight or flight"), involve a variety of limbic brain regions, including the amygdala and prefrontal cortical regions. The limbic system is also tightly linked to the autonomic nervous system and, via the hypothalamus, regulates endocrine, cardiovascular and visceral functions. The amygdala, hippocampus and medial prefrontal cortex (PFC) critically influence the responses of the hypothalamic-pituitary-adrenal axis (HPAA).1 2 Furthermore, dysfunctions in these brain areas are implicated in the etiology of mental disorders, including depression and posttraumatic stress disorder, which are often characterized by HPAA abnormalities,3 and in attention deficit-hyperactivity disorder.2 4

The amygdala is connected to the PFC, hippocampus, septum and dorsomedial thalamus. Because of its connectivity within the limbic system, it plays an important role in the mediation and control of emotions, including love and affection, fear, aggression and reward, and is therefore essential for social behavior and for the survival of both the individual and the species. For instance, damage to the amygdala reduces aggressive behavior and the experience of fear, whereas electrical stimulation of the amygdala has the opposite effect.1 2

The nucleus accumbens plays an important role in reward, pleasure, emotions, aggression and fear. Its core and shell subregions receive inputs from a variety of limbic and prefrontal regions, including the amygdala and hippocampus, and form a network involved in the acquisition, encoding and retrieval of aversive learning and memory.5

The PFC can be roughly subdivided into dorsolateral, medial (which may include the functionally related anterior cingulate cortex) and orbitofrontal (OFC) regions.6 Both the medial PFC and the OFC are part of a frontostriatal circuit that has strong connections to the amygdala and other limbic regions.7 Thus, prefrontal regions are anatomically well suited to integrate cognitive and emotional functions.8

The OFC is involved in sensory integration and higher cognitive functions, including decision making. The OFC also plays an essential role in a variety of emotional functions, such as judging the hedonic aspects of rewards or reinforcers, which are important for planning behavioral responses associated with reward and punishment. Dysfunction of, or damage to, the OFC can result in poor empathy and impaired social interactions. The OFC also plays a major role in the regulation of aggression and impulsivity, and damage to the OFC can lead to behavioral disinhibition, such as compulsive gambling, drug use and violence.8

The cingulate cortex can be differentiated, cytoarchitectonically and functionally, into an anterior part, which exerts executive functions, and a posterior part, which has evaluative functions. Furthermore, the cingulate cortex has two major subdivisions: a dorsal cognitive division and a rostral-ventral affective division.9 However, a variety of studies indicate an anatomical and functional continuum rather than strictly segregated operations.9 10

The ventral and dorsal areas of the cingulate cortex play a role in autonomic functions and in a variety of cognitive functions, such as reward anticipation, decision making, empathy and emotional regulation11 (Figure 1).

Reward and aversion

In 1954, James Olds and Peter Milner reported the results of what became a milestone in research on reward mechanisms. They implanted electrodes in the brains of rats and then placed the rats in operant chambers equipped with a lever that, when pressed, delivered current to the electrodes. Under these conditions, when an electrode was implanted in certain regions of the brain, notably the septal area, the rats would press the lever "to stimulate [themselves] in these places frequently and regularly for long periods of time if permitted to do so".12 Not only would animals work for food when hungry or for water when thirsty, but even sated rats would work for electrical stimulation of their brains.12 13

The seminal work of Olds and Milner triggered a wave of research into the brain mechanisms of reward, beginning with a spate of studies on electrical brain stimulation reward.13

The biological basis of mood-related states such as reward and aversion is not yet fully understood, although it is recognized to involve a functional neuronal network. Classical formulations of these states implicate the mesocorticolimbic system, which includes the nucleus accumbens (NAc), the ventral tegmental area (VTA) and the prefrontal cortex, in reward,14 17 whereas the amygdala (AMG), periaqueductal gray (PAG) and locus coeruleus (LC) are often implicated in aversion.14 18

However, the notion that certain brain areas narrowly and rigidly mediate either reward or aversion is becoming outdated, as sophisticated new tools and methodologies provide new evidence.17

The NAc, especially its outer region, called the shell, has been shown to play a necessary role in assigning motivational properties to rewards and to the stimuli that predict them. For example, in rat and mouse models, an intact NAc is required for the animal to learn to work (e.g. lever pressing) to obtain natural rewards, such as palatable foods, or to learn to self-administer drugs. Once an animal has learned how to obtain a reward and the relevant behaviors have become ingrained, reward seeking no longer depends on the NAc and is instead supported by the dorsal striatum (the caudate and putamen in humans), the brain structure that underlies well-learned behaviors and habits.7 19

Under natural conditions, speed and efficiency in gaining food, water and shelter improve the probability of survival. Thus, a critical role of reward circuitry is to facilitate the rapid learning of cues that predict the proximity of reward and of the behaviors that maximize the chances of successfully obtaining it. Once learned, predictive cues automatically activate cognitive, physiologic and behavioral responses aimed at obtaining the predicted reward.20 21

VTA dopamine neurons are the primary source of dopamine (DA) in target structures such as the medial prefrontal cortex and the NAc, which play important roles in a broad range of motivated behaviors and neuropsychiatric disorders.22 24

Dopamine released from VTA neurons in the NAc plays the key role in binding rewards and reward-associated cues to adaptive reward-seeking responses.25 26 In animals, implanted electrodes can record the firing of dopamine neurons, and microdialysis and electrochemical methods can be used to detect dopamine.27 In humans, positron emission tomography (PET) permits indirect measurement of dopamine release by quantifying how much a positron-emitting D2 receptor ligand, previously bound to the receptors, is displaced following a stimulus or pharmacological challenge. Using such methods in multiple paradigms, it has been well established that natural rewards cause dopamine neurons to fire and dopamine to be released in the NAc and other forebrain regions. When dopamine action is blocked (whether by lesioning dopamine neurons, blocking postsynaptic dopamine receptors or inhibiting dopamine synthesis), rewards no longer motivate the behaviors necessary to obtain them.27 29
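PET displacement studies of this kind commonly summarize the effect as the percentage reduction in the radioligand's binding potential (BP) between a baseline scan and a scan after the challenge: the less tracer remains bound, the more endogenous dopamine is inferred to have competed for the D2 receptors. The Python sketch below computes only that final index. The function name and the BP values in the example are hypothetical and chosen for illustration; real analyses first estimate BP with kinetic models and reference regions, which are not shown here.

```python
def percent_bp_change(bp_baseline: float, bp_challenge: float) -> float:
    """Percent reduction in D2 radioligand binding potential (BP).

    A positive value means less tracer was bound after the challenge,
    which is interpreted as displacement by released endogenous dopamine.
    """
    if bp_baseline <= 0:
        raise ValueError("baseline binding potential must be positive")
    return 100.0 * (bp_baseline - bp_challenge) / bp_baseline


# Hypothetical striatal BP values (illustrative only, not measured data).
baseline_bp = 3.0
post_challenge_bp = 2.7
print(f"{percent_bp_change(baseline_bp, post_challenge_bp):.1f}% reduction")
```

The sign convention (positive = displacement) is a choice made here for readability; some studies report the same quantity as a negative change in BP.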

Much evidence suggests that the precise pattern of dopamine neuron firing, and the resulting synaptic release of dopamine in forebrain circuits, act to shape behavior so as to maximize future reward.19 30

In a basal state, dopamine neurons have a slow, tonic pattern of firing. When a new, unexpected or greater-than-expected reward is encountered, dopamine neurons fire a phasic burst, causing a transient increase in synaptic dopamine. When a reward is predicted by known cues and is exactly as expected, the tonic pattern of firing does not change; that is, there is no additional dopamine release. When a predicted reward is omitted or is smaller than expected, dopamine neurons briefly pause, firing below their tonic rate. Phasic increases in synaptic dopamine signal that the world is better than expected, facilitate the learning of new predictive information and bind the newly learned predictive cues to action.20 21
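The three firing patterns described above are often formalized as a reward prediction error: the difference between the reward received and the reward predicted by a cue. The Python sketch below uses the Rescorla-Wagner (delta-rule) update, a standard textbook learning rule swapped in here for illustration rather than a model proposed by the cited sources; the learning rate, reward size and trial schedule are hypothetical. By analogy, a positive error corresponds to a phasic burst, an error near zero to unchanged tonic firing, and a negative error to a pause.

```python
def run_trials(rewards, alpha=0.3):
    """Return the prediction error on each trial for a single cue.

    value is the reward predicted by the cue; delta = reward - value is the
    prediction error, and value moves a fraction alpha toward the reward.
    """
    value = 0.0          # the cue initially predicts nothing
    errors = []
    for reward in rewards:
        delta = reward - value   # >0 burst, ~0 tonic, <0 pause (by analogy)
        value += alpha * delta   # Rescorla-Wagner / delta-rule update
        errors.append(delta)
    return errors


def firing_label(delta, tolerance=0.05):
    """Map a prediction error onto the qualitative firing pattern in the text."""
    if delta > tolerance:
        return "phasic burst"
    if delta < -tolerance:
        return "pause below tonic rate"
    return "tonic (no change)"


# Hypothetical schedule: 20 rewarded trials, then one omitted reward.
schedule = [1.0] * 20 + [0.0]
errors = run_trials(schedule)
for trial in (0, 19, 20):
    print(trial, round(errors[trial], 3), firing_label(errors[trial]))
```

This trial-level rule captures the burst, the absence of change once the reward is predicted, and the pause on omission. It does not capture the transfer of the burst from the reward to the predictive cue, which requires a temporal-difference formulation.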

Two distinct patterns of dopamine neuron activity occur in the behaving animal. Midbrain dopamine neurons typically fire tonically at low frequencies (1-5 Hz), which is thought to produce tonic stimulation of high-affinity dopamine D2 receptors in terminal regions of the mesolimbic dopamine system, including the NAc. In contrast, when animals are presented with motivationally salient stimuli, such as conditioned cues that predict drug availability, midbrain dopamine neurons fire in high-frequency bursts (>20 Hz), producing transient increases in NAc DA concentrations that are sufficient to occupy low-affinity DA D1 receptors.31 33
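The link between firing mode and receptor subtype rests on receptor affinity. Under a simple equilibrium-binding assumption, the fraction of receptors occupied is C / (C + Kd), where C is the extracellular dopamine concentration and Kd the dissociation constant (a small Kd means high affinity). The Python sketch below applies that formula. All concentrations and Kd values are hypothetical round numbers chosen only to contrast "high" and "low" affinity; they are not taken from the cited studies, and real synaptic dopamine is neither at equilibrium nor spatially uniform.

```python
def occupancy(conc_nM: float, kd_nM: float) -> float:
    """Fractional receptor occupancy at equilibrium: C / (C + Kd)."""
    return conc_nM / (conc_nM + kd_nM)


# Hypothetical values in nM, for illustration only.
KD_HIGH_AFFINITY_D2 = 10.0    # high affinity: small Kd
KD_LOW_AFFINITY_D1 = 1000.0   # low affinity: large Kd
TONIC_DA = 10.0               # assumed level during 1-5 Hz tonic firing
PHASIC_DA = 1000.0            # assumed transient peak during >20 Hz bursts

for label, conc in (("tonic", TONIC_DA), ("phasic", PHASIC_DA)):
    d2 = occupancy(conc, KD_HIGH_AFFINITY_D2)
    d1 = occupancy(conc, KD_LOW_AFFINITY_D1)
    print(f"{label:>6}: D2 occupancy {d2:.0%}, D1 occupancy {d1:.0%}")
```

With these assumed numbers, the tonic level already occupies a large share of the high-affinity receptors but almost none of the low-affinity ones, whereas only the phasic transient engages the low-affinity population substantially, which is the qualitative point made in the text.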

The pleasure-reward system

Reward involves multiple neuropsychological components: 1. the hedonic sensation of pleasure itself; 2. the motivation to obtain the reward (incentive component); and 3. reward-related learning.34

Pleasure represents the subjective hedonic value of rewards; it can be either rewarding, when it follows satisfaction, or an incentive, when it reinforces behaviors.35 36

Pleasure is not merely a sensation. Even a sensory pleasure such as a sweet taste requires the co-recruitment of additional, specialized pleasure-generating neural circuitry, which adds to the sweetness the positive hedonic impact that elicits "liking" reactions.34 37

Different rewarding stimuli may elicit reward values that differ both qualitatively and quantitatively. The pleasure or reward value associated with sexual intercourse may not only exceed in magnitude that associated with scratching an itch; the two may also be qualitatively distinct.13

There is evidence in animals of increased dopaminergic activity in the VTA in response either to an unexpected reward or, once the characteristics of the reward have been recognized, to the anticipation of the reward. Thus, the anticipation of satisfaction activates neurochemical pleasure mechanisms and reinforces the behavior that obtains it. In this way, pleasure contributes to an increased level of excitation of the organism.36

Endocannabinoid system

The endogenous cannabinoid system is a signaling system composed of cannabinoid receptors, endogenous ligands for these receptors and proteins involved in the formation and deactivation of these endogenous ligands.38 41

Two types of cannabinoid (CB) receptors have been identified. CB1 receptors are highly expressed in the brain and mediate most of the psychoactive (central) effects of cannabis.10 42 44 CB2 receptors, discovered later, are present predominantly in the periphery and, at low levels, in some areas of the brain.43 47 In the dopaminergic mesolimbic system, the best known circuit involved in motivational processes, moderate to high concentrations of CB1 receptors are found in the terminal region (the striatum), whereas low concentrations are found in the region of origin (the VTA).10 48

Neurobiology of addiction

All drugs of abuse increase dopamine concentrations in terminal regions of the mesolimbic dopamine system. Increases in NAc DA are theorized to mediate the primary positive reinforcing and rewarding properties of all known drugs of abuse.49 52

In addition, when animals are presented with conditioned stimuli that predict drug availability, transient dopamine events that are theorized to mediate the secondary reinforcing effects of drugs of abuse and initiate drug seeking are also observed in the NAc.31 53

Specifically, each drug mimics or enhances the actions of endogenous neurotransmitters at their receptors. Opioids are presumed to be habit-forming because of their actions at opioid receptors, and nicotine because of its action at nicotinic acetylcholine receptors. Phencyclidine acts at N-methyl-D-aspartate (NMDA) and sigma receptors and also blocks dopamine reuptake.5 54 Tetrahydrocannabinol (THC) binds to cannabinoid receptors.55 56 Although amphetamines and cocaine do not act directly at dopamine receptors, they are reinforcing because they increase the concentration of dopamine at the dopamine receptors of the nucleus accumbens and frontal cortex.16

It has become clear that the acute administration of most drugs of abuse increases dopamine transmission in the basal ganglia,16 and that dopamine transmission in this brain region plays a crucial role in mediating the reinforcing effects of these drugs.57 60

The mesolimbic dopamine pathway, made up of dopaminergic cells in the VTA that project to the NAc in the ventral striatum, is considered crucial for drug reward.54 The mesostriatal (dopamine cells of the substantia nigra projecting to the dorsal striatum) and mesocortical (dopamine cells of the VTA projecting to the frontal cortex) pathways are also recognized as contributing to the prediction of drug reward (anticipation) and to addiction.61 The time course of dopamine signaling is also a key factor: the fastest signaling mainly plays a role in reward and in attributing value to the predicted outcomes of behavior, whereas sustained dopamine release has an enabling effect on specific behavior-related systems.24 The mode of dopamine cell firing (phasic vs. tonic) also differentially modulates the rewarding and conditioning effects of drugs (predominantly phasic) and the changes in executive function that occur in addiction (predominantly tonic)62 (Table 1).

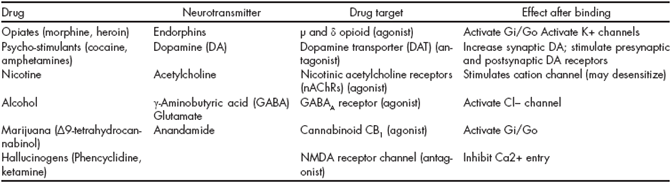

Table 1 Properties of addictive drugs

Adapted from Hyman SE, Malenka RC, Nestler EJ. Neural mechanisms of addiction: the role of reward-related learning and memory. Annu Rev Neurosci. 2006;29:565-598.56

The amygdala and the aversion system

Pavlovian fear conditioning is an associative learning task in which subjects are presented with a neutral conditioned stimulus (CS) paired with an innately aversive unconditioned stimulus (US).63

Pavlovian fear conditioning (and its aversive reinforcement) is severely impaired by disruption of the amygdala, indicating that the amygdala plays an important role in learning to respond defensively to stimuli that predict aversion.63 64

Fear is an intervening variable between sets of context-dependent stimuli and suites of behavioral responses. Unlike in reflexes, in an emotion such as fear this link is much more flexible, and the state can be present before, and persist after, the eliciting stimuli.65 66

Previous studies have shown that electrical stimulation of the amygdala evokes fear-associated responses such as cardiovascular changes,67 68 potentiated startle,69 and freezing.70

Contemporary fear models posit that the basolateral amygdala complex (basal and lateral nuclei) is interconnected with the central nucleus (CeA), which is thought to be the main amygdaloid output structure, sending efferent fibers to various autonomic and somatomotor centers that mediate specific fear responses.71

Neural activity in the amygdala appears to be required for expression of both conditioned and unconditioned responses to aversive stimuli, whereas synaptic plasticity appears to be required only for acquisition of conditioned responses, and not for expression of conditioned or unconditioned responses.63

After fear memory consolidation, which requires 4-6 h, the fear memory becomes remarkably resistant to perturbation, giving way only to numerous unreinforced CS presentations, which lead to the extinction of learned fear responses. However, substantial remnants of the originally learned fear survive even extensive extinction and cause fear-related behavior to reappear in a variety of circumstances, such as fear renewal and facilitated reacquisition.72
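The observation that extinction suppresses, rather than erases, the original fear memory can be illustrated with a toy model. In the Python sketch below, which is a hypothetical illustration and not a model from the cited work, conditioning strengthens an excitatory CS-US association, while extinction builds a separate inhibitory association that is assumed to be expressed only in the context where extinction took place (loosely following the common view of extinction as context-dependent new learning). The learning rate, trial counts and context labels are assumptions.

```python
ALPHA = 0.3  # hypothetical learning rate


def condition(excitation, trials):
    """CS paired with US: the excitatory association grows toward 1."""
    for _ in range(trials):
        excitation += ALPHA * (1.0 - excitation)
    return excitation


def extinguish(excitation, inhibition, trials):
    """CS alone in the extinction context: inhibitory learning grows until
    net fear in that context approaches 0. Excitation is left untouched."""
    for _ in range(trials):
        net = excitation - inhibition
        inhibition += ALPHA * net
    return inhibition


def fear(excitation, inhibition, in_extinction_context):
    """Expressed fear; inhibition is assumed to be context-gated."""
    gated = inhibition if in_extinction_context else 0.0
    return max(excitation - gated, 0.0)


exc = condition(0.0, trials=10)          # conditioning in context A
inh = extinguish(exc, 0.0, trials=30)    # extinction in context B
print("after extinction, context B:", round(fear(exc, inh, True), 3))
print("renewal test, context A:    ", round(fear(exc, inh, False), 3))
```

Because the excitatory association is never reduced, fear is near zero in the extinction context but returns almost fully when the CS is tested elsewhere, a simplified form of the renewal effect. A single-weight model in which extinction simply lowers the CS-US association would erase the memory instead and could not produce this result.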

The lateral amygdala (LA) is essential for the acquisition and consolidation of auditory cued fear conditioning. Thalamic and cortical auditory inputs to the LA are potentiated after fear conditioning, and this information is relayed to the basal and central amygdala to evoke aversive behavior.72

The fear-conditioning circuitry of the amygdala appears to be functionally lateralized according to the side of the body (left or right) on which a predicted threat is anticipated.73

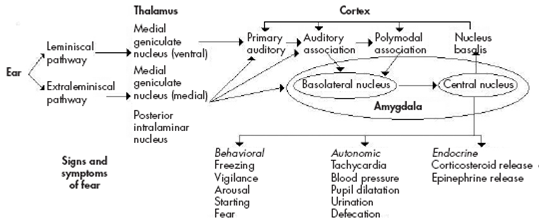

The LA neuronal population displayed increased average CS-evoked firing after conditioning, decreased responses after extinction and potentiated responses after reconditioning, in tight correlation with the changes in freezing responses72 74 (Figure 2).

Adapted from: Heninger GR. Neuroscience, Molecular Medicine, and New Approaches to the Treatment of Depression and Anxiety. In: Waxman S, editor. From Neuroscience To Neurology. San Diego: Elsevier Academic Press; 2005. Chapter 12

Figure 2 Auditory conditioned fear pathways. Sensory information is transmitted to the thalamus via the lemniscal pathway, involving the ventral division of the medial geniculate nucleus, which projects only to the primary auditory cortex. Pathways thought to be involved in emotional learning are extralemniscal: they project to the medial division of the medial geniculate nucleus and to the posterior intralaminar nucleus, which in turn project to the primary auditory cortex, the auditory association cortex and the basolateral nucleus of the amygdala. The basolateral nucleus also receives inputs via the auditory association and polymodal association cortices, and it projects to the central nucleus of the amygdala, a major output of the amygdala, which projects to brain areas that produce the behavioral, autonomic and endocrine manifestations of fear. The central nucleus also feeds back to the cortex via the nucleus basalis.
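The connections listed in the Figure 2 caption can be encoded as a directed graph, which makes the separate thalamic and cortical routes to the amygdala explicit. The Python sketch below includes only the projections named in the caption; the node abbreviations (MGv, MGm, PIN, A1 and so on) are labels introduced here, and the graph is a schematic of the caption, not a complete connectivity map. It enumerates every route from each thalamic division to the central nucleus.

```python
from typing import Dict, List, Optional

# Projections named in the Figure 2 caption (abbreviations introduced here).
PROJECTIONS: Dict[str, List[str]] = {
    "MGv": ["A1"],                       # lemniscal: primary auditory cortex only
    "MGm": ["A1", "AudAssoc", "BLA"],    # extralemniscal
    "PIN": ["A1", "AudAssoc", "BLA"],    # extralemniscal
    "AudAssoc": ["BLA"],
    "PolymodalAssoc": ["BLA"],
    "BLA": ["CeA"],
    "CeA": ["FearOutputs", "NBasalis"],  # behavioral, autonomic, endocrine
    "NBasalis": ["Cortex"],              # feedback to cortex
}


def routes(source: str, target: str,
           path: Optional[List[str]] = None) -> List[List[str]]:
    """Depth-first enumeration of all acyclic routes from source to target."""
    path = (path or []) + [source]
    if source == target:
        return [path]
    found: List[List[str]] = []
    for nxt in PROJECTIONS.get(source, []):
        if nxt not in path:  # skip cycles
            found.extend(routes(nxt, target, path))
    return found


for thalamic in ("MGv", "MGm", "PIN"):
    paths = routes(thalamic, "CeA")
    print(thalamic, "->", [" > ".join(p) for p in paths] or "no route to CeA")
```

The empty result for MGv reflects only the edges encoded here: the caption does not describe projections onward from the primary auditory cortex, so none are included.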

Conclusion

The reward and aversion systems are important mechanisms for the survival of the individual and the species; they are present in all mammals and perhaps in all animals. The mesocorticolimbic dopaminergic system is the main substrate of the reward system, and its main neurotransmitter is dopamine, although serotonin, noradrenaline, endogenous opioids and cannabinoids, and perhaps other transmitters, also play a role.

The original function of the reward/aversion system is most likely the survival of the individual and the species, by promoting defense and/or pleasure mechanisms. The reward system is also important for sexual behavior, nursing, and eating and drinking behavior. Potent psychoactive drugs stimulate the reward/aversion system in an unnatural way (with massive stimuli), causing a disproportionate and unnatural response and thereby inducing addiction and sensitization.

The aversive system may help the animal by inducing fight-or-flight behavior or the avoidance of certain foods. The amygdala is probably the main anatomical structure subserving aversive behavior, although inhibition of the reward system may also trigger aversive behavior.

Activation and sensitization of the aversive system are likely to be involved in anxiety disorders, panic attacks, post-traumatic stress disorder, phobias and abstinence syndromes.

The reward/aversion system is closely related to memory, emotions and the autonomic and endocrine systems, and it is also related to cognitive functions through its connections to the prefrontal lobe. There is heuristic value in considering the reward/aversion system as a functional unit. Both systems are crucial for survival, and this common function should not be underestimated, particularly from an evolutionary viewpoint. The two systems share some, though not all, anatomical structures and many neurotransmitter substances. It may be an oversimplification to state that the withdrawal of one system leads to overactivity of the other, but this hypothesis should be explored in future clinical and animal research.
