1 Introduction

The knowledge of human limb motion has an important relevance in robotics when a robot is wanted to perform human-like behaviours. The robotic teleoperation is the action to control a robot at a distance.

One of the most important method to teleoperate robots is through haptic devices. These devices can be joysticks or special suits like gloves or full-body suits that are equip with inertial measurement unit (IMU) and are able to transfer force and positions to a robot in order to perform an specific motion [15, 14, 12, 9, 19, 10, 1, 16, 18].

The disadvantage of these methods is the sensors noise that can carry out low precision on the final motion of the robot. Other remarkable disadvantage is the suits are made for a fixed size and not all person can use them.

Another method is capture specific points of the body through artificial vision and artificial intelligence, afterwards use them as the set-points for the robot. The motion capture can be with an algorithm that search for a specific color in an image, for example a mark on person’s clothes.

Also, the augmented reality, that is the combination of real word images and information generated by a computer, in combination with haptic devices improves the teleoperation capabilities [8, 17, 11].

In Health Sciences, the robots teleoperation is applied to rehabilitation and therapy techniques such as therapies for children with autism [3, 5, 4]. In the present paper, a method to mimic the human limbs motions using three-dimensional kinematic model and a fuzzy logic control algorithm.

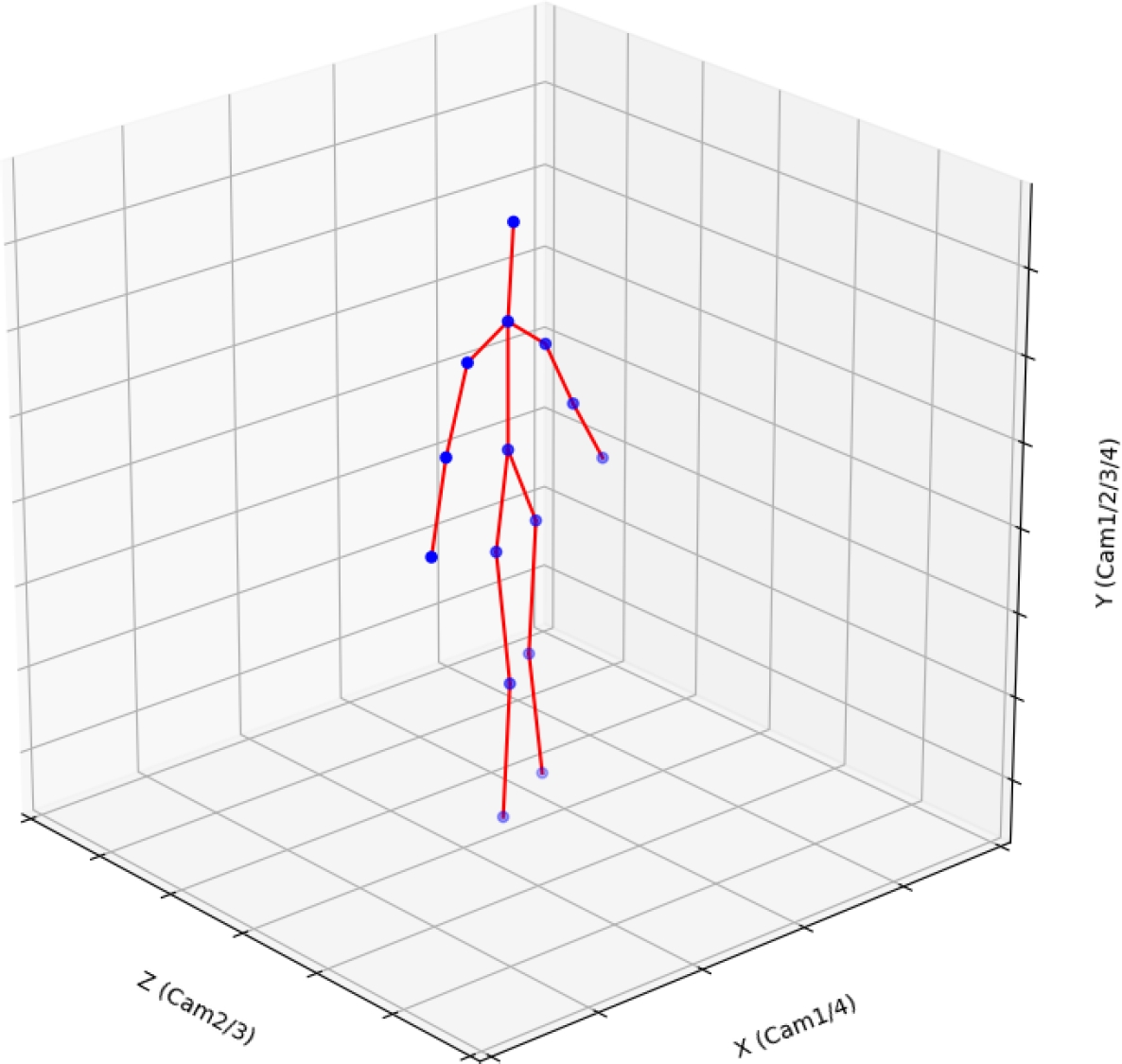

The kinematic model receives as input the points array from an artificial intelligence algorithm [2] which correspond to the locations of human joint, this array contains the points obtained by three-dimensional analysis of 4 plain images that are taken from 4 different points of view (front, back, right side, left side) instead of a 3-D approximation from a single point of view of a bi-dimensional image [20].

Then, the angles of limbs are obtained by three equations which are derived from kinematic model. These angles correspond to the motions that human limbs can perform on the different axis and are the set point of the fuzzy control algorithms of the robot. The fuzzy logic algorithms are Mamdani fuzzy inference systems with ”if, else” rules engine.

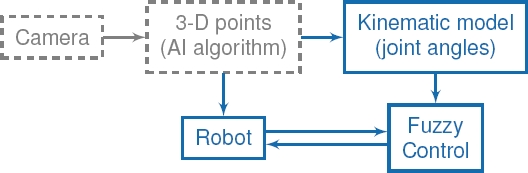

On the Fig. 1 is depicted a general flow diagram of this method. The artificial intelligence algorithm [2] obtains the information of four cameras equipped with two different sensors, RGB sensor and depth sensor.

The images captured by the 4 RGB sensors are processed with a Convolutional Neural Network which creates feature maps of input image and infers 2-D key points for person in the image. The 2-D key points are the locations of person’s joints. After that, the 3-D points are calculated making the relation between four set of 2-D points.

Two depth sensors work as security system that activate the RGB data acquisition till the person is in the middle of cameras array. This system ensures that person position in the images is similar in order to minimize error when the 3-D points are calculated.

The artificial intelligence algorithm [2] provides a robust method to detect the joint’s location. The measurements are not affected by the lighting changes and it was not necessary to use special equipment on the body, that is the main advantage of the algorithm.

2 Method

2.1 Kinematic Model

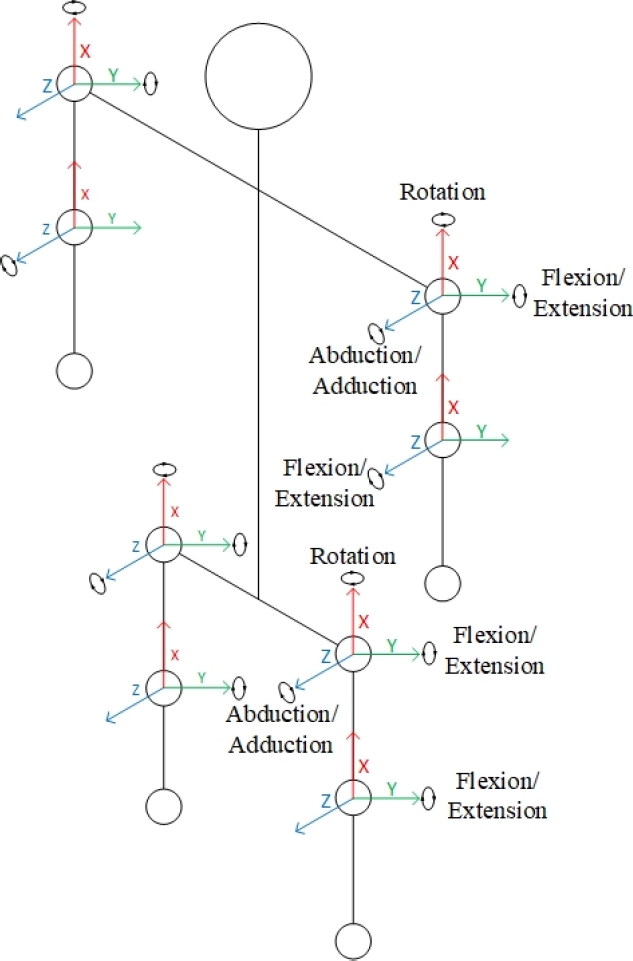

The kinematic model was based on the five basic motions that the NAO robot [6] and humans can perform [13, 7]. These movements are described below:

– Flexion. It is angle decrease at one joint.

– Extension. It is angle increase at one joint.

– Abduction. It is the motion of a limb away from the midline of the body.

– Adduction. It is the motion of a limb toward to the midline of the body.

– Rotation. It is the motion around a longitudinal axis of a bone, it can be internal or external.

The motions of upper limbs parts considered in this method were shoulders and elbow motions; the shoulders motions are Flexion / Extension

The elbows motions are Flexion / Extension

The hips motions are Flexion / Extension

The Roll, Yaw and Pith rotations matrixes were selected to describe the previous motions. The Equation 1 is the rotation matrix on the

The Equation 3 is the rotation matrix on the

where

Due the shoulders and hips are able to perform rotations on the 3 axis, the 3 previous equations were multiplied to obtain a single rotation matrix, equation 4:

where:

The artificial intelligence algorithm [2] is able to locate 15 key points. Three of these key points were used as reference point

The kinematic model for the upper and lower limbs is described by the following equations:

where the Equation 5 describes the final position of the right arm with the vector

A second translation from the point 2 to the point 3 creates the vector

Finally, the vector

This procedure was applied to the other limbs, where Equation 6 corresponds to the left arm, Equation 7 to the right leg and Equation 8 to the left leg.

In Tables 1 and 2 are shown the maximum angle that human limbs can perform before get injured. These angles are know as comfort angles [13, 7]. The vector value of each limb was calculated by the Equation 9:

where

Table 1 Comfort angles of right limbs

| Part | Movement | Axis | ID | Angle Range |

| shoulder | flex / ext | -150° to 40° | ||

| abd / add | -150° to 20° | |||

| rotation | -70° to 60° | |||

| elbow | flex / ext | 10° to 150° | ||

| hip | flex / ext | 130° to 15° | ||

| abd / add | -45° to 20° | |||

| rotation | 50° to 45° | |||

| knee | flex / ext | 0° to 155° |

Table 2 Comfort angles of left limbs

| Part | Movement | Axis | ID | Angle Range |

| shoulder | flex / ext | -150° to 40° | ||

| abd / add | -20° to 150° | |||

| rotation | -70° to 60° | |||

| elbow | flex / ext | -150° to 10° | ||

| hip | flex / ext | -130° to 15° | ||

| abd / add | -20° to 45° | |||

| rotation | -50° to 45° | |||

| knee | flex /ext | 0° to 155° |

To obtain each motion angle, the directional cosines were selected to estimate the rotation angles

The director cosine for the rotation angle at the

where

2.2 Control Algorithm

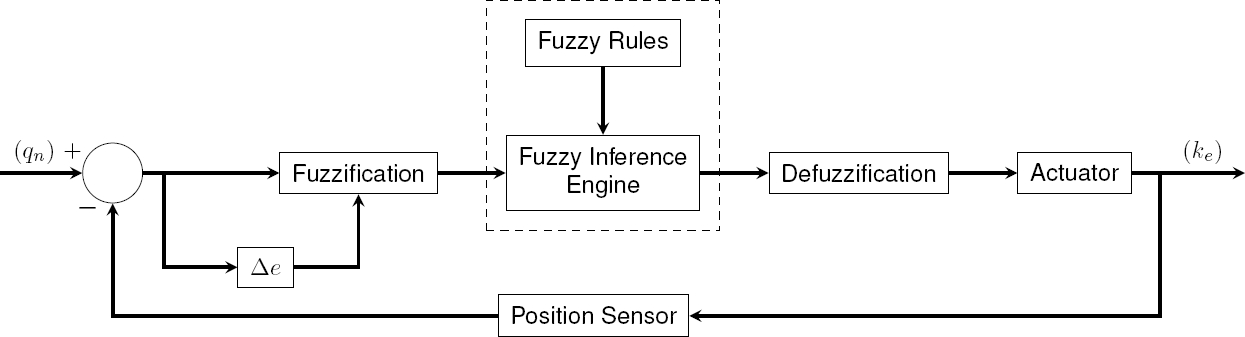

The control algorithm used to control the robot’s joint speed was a Fuzzy PD (Proportional Derivative) controller.

This controller is a Mamdani Fuzzy Inference System which is based on ”if-else” inference engine. For this application, the hip rotations were excluded due the NAO robot [6] is not able to perform this motion.

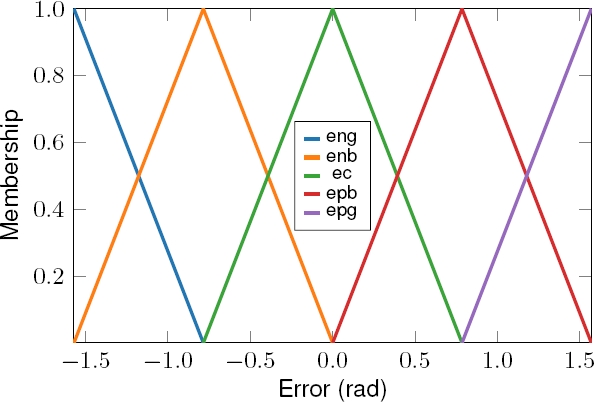

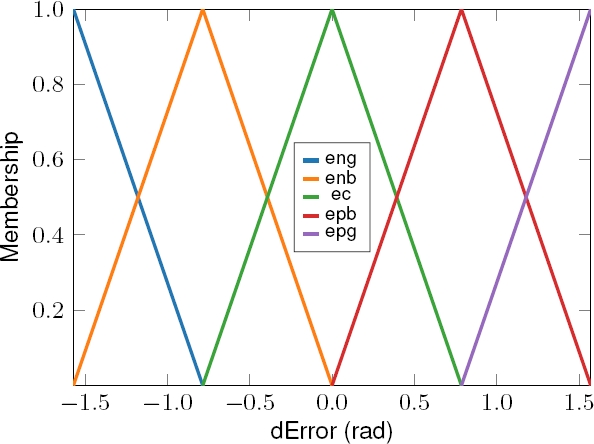

The controller’s set-points were bounded to the angles values in the Tables 1 and 2 in order to prevent a damage at the robot joints. In the first step, the controller, Fig. 7, assigned fuzzy values to error and differential error through input membership function.

The error was estimated subtracting the set-point and the angle measured by the sensors in robot’s joints at an instance of time

Then, the fuzzy values from the inputs were processed by an inference engine. Finally, the crisp values, that controls the robot’s joints, were estimated by the defuzzification block and sent them to robot’s actuators.

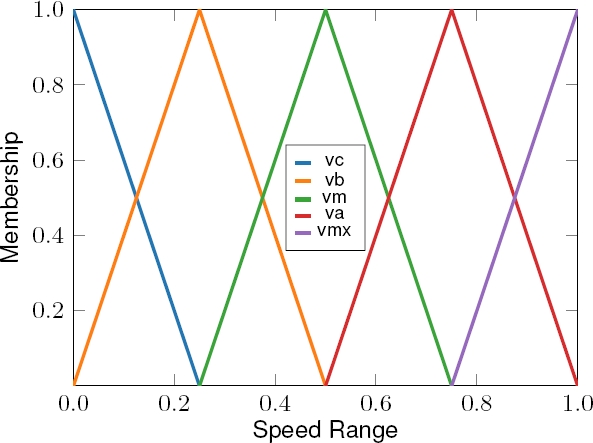

The error and the differential error were in radian and could be positive and negative, due this, five triangular memberships functions were assigned. For example, the membership functions from the right shoulder are shown on the Fig 4 and 5 .

Each motion angle have five triangular memberships functions for the error and other five for the differential error. The name assigned to the membership functions are the follow:

– eng. Big Negative Error.

– enb. Low Negative Error.

– ec. Zero Error.

– epg. Big Positive Error.

– epb. Low Positive Error.

The robot’s speed motion was controlled with values from 0 to 1, which 0 is zero speed and 1 is the maximum speed. Five triangular membership functions were defined in this range.

On the Fig 6 is shown an example from the right shoulder. Similarly to the input, each motion angle has five output membership functions. The name assigned to the membership functions are the follow:

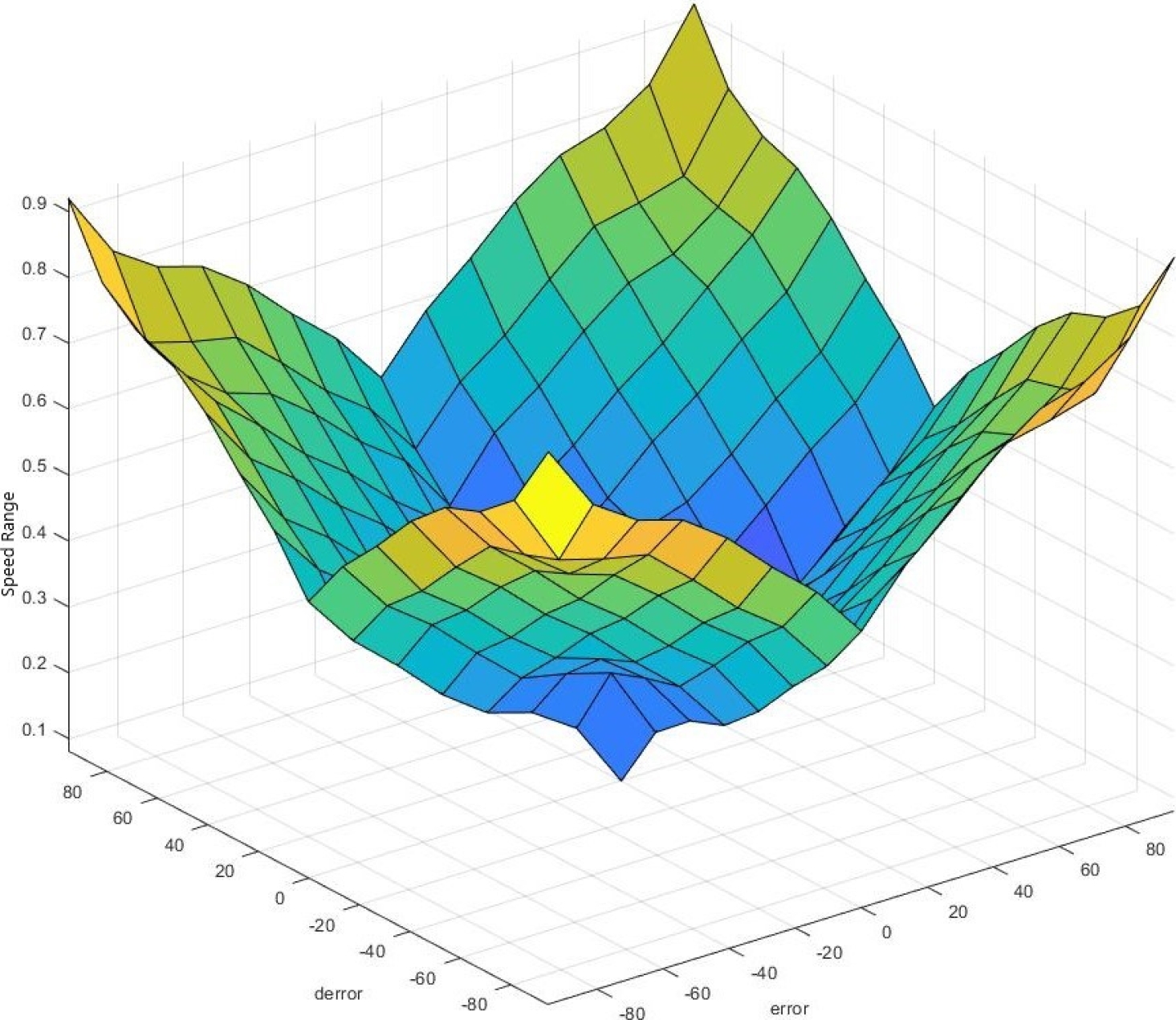

The inference engine was defined with the rules shown in the Table 3. The fuzzy rules were designed to smoothly decrease the speed when the joint approaches to the set point. These behaviour is shown on the control surface in Fig. 8.

Table 3 Fuzzy inference rules

|

e / |

eng | enb | ec | epb | epg |

| eng | vmx | va | vm | va | vmx |

| enb | va | vm | vb | vm | va |

| ec | vm | vb | vc | vb | vm |

| epb | va | vm | vb | vm | va |

| epg | vmx | va | vm | va | vmx |

Finally, the crisp value that was sent to the robot was estimated with Center of Gravity (CoG) method. This defuzzification method is shown in the Equation 13:

where

3 Results

The tests were divided into two: stable posture tests and unstable posture test. When the body’s center of mass is located at the support base is called as stable posture.

The human is able to perform this kind of posture without loss the balance. If the body’s center of mass is not located at the support base, then it is an unstable posture. For this test, the unstable posture was divided into free unstable posture and intervened unstable posture.

When a person lifts the robot in order to avoid the feet touch the ground is an intervened unstable posture, if the robot’s feet touch the ground is a free unstable posture. The errors were estimated subtracting the final angle for each joint of the human limbs and robot limbs. These angles are shown in the Tables 1 and 2:

The Equation 14 is the Relative Angle Error

On the fists test, the person performed an stable posture. The person only extended his arms and did not move the legs. The Table 4 contains the errors from each limb’s angle, in this Table P-R is refereed to Person-Robot.

Table 4 First stable posture errors

| Part | Right ID | P-R Error (%) | Left ID | P-R Error (%) |

| shoulder | 17.3301 | 12.9054 | ||

| 7.4301 | 53.0586 | |||

| 5.9549 | 19.4928 | |||

| elbow | 8.4112 | 11.8060 | ||

| hip | 22.4334 | 14.4368 | ||

| 19.2864 | 42.8673 | |||

| knee | 8.3355 | 18.6414 |

In the Fig. 9, Fig. 10 and Fig. 11 are show examples of the approximated location of human’s key points, the skeleton created by the artificial intelligence algorithm [2] and the final robot’s posture, respectively.

The second test was a stable posture too. But in this case, the person extended his arms completely in order to perform a “T” posture, the legs remained at the same position of the previous posture.

The Table 5 contains the errors from each limb’s angle, in this Table P-R is refereed to Person-Robot. On the third test, the person raised one leg and extend the arms slightly and the robot stayed on the ground because it was a free unstable posture.

Table 5 Second stable posture errors

| Part | Right ID | P-R Error (%) | Left ID | P-R Error (%) |

| shoulder | 15.3593 | 3.6016 | ||

| 13.1042 | 16.9010 | |||

| 4.2484 | 1.9413 | |||

| elbow | 9.4681 | 13.2249 | ||

| hip | 32.2980 | 19.3410 | ||

| 33.6234 | 26.9505 | |||

| knee | 4.5920 | 16.5909 |

The robot’s anti-fall safety system located at the at the feet was activated automatically lowering the legs to the ground in order to avoid a fall. The Table 6 contains the errors from each limb’s angle, in this Table P-R is refereed to Person-Robot.

Table 6 Unstable posture errors

| Part | Right ID | P-R Error (%) | Left ID | P-R Error (%) |

| shoulder | 22.5545 | 31.8723 | ||

| 15.2686 | 53.1763 | |||

| 25.7007 | 21.2021 | |||

| elbow | 17.3055 | 63.7488 | ||

| hip | 27.9923 | 56.8126 | ||

| 44.0163 | 96.9256 | |||

| knee | 30.0295 | 98.2249 |

The fourth test was an intervened unstable posture, hence a second person lifted the robot in order prevent the activation of robot’s anti-fall safety system.

The first person raised one leg, flexed one arm and raised the same arm in order to locate the wrist near to the chest. The Table 7 contains the errors from each limb’s angle, in this Table P-R is refereed to Person-Robot.

Table 7 First intervened unstable posture errors

| Part | Right ID | P-R Error (%) | Left ID | P-R Error (%) |

| shoulder | 7.5838 | 37.0683 | ||

| 30.6896 | 16.7383 | |||

| 9.9927 | 30.1306 | |||

| elbow | 24.8028 | 6.7421 | ||

| hip | 8.1788 | 14.6641 | ||

| 8.2292 | 21.3018 | |||

| knee | 88.5699 | 19.5520 |

On the final test the fist person raised one leg and both arms and the robot stayed lifted by the other person in order to perform an intervened unstable posture. The Table 8 contains the errors from each limb’s angle, in this Table P-R is refereed to Person-Robot.

Table 8 Second Intervened unstable posture errors

| Part | Right ID | P-R Error (%) | Left ID | P-R Error (%) |

| shoulder | 13.1688 | 12.4269 | ||

| 26.2602 | 22.1812 | |||

| 16.9042 | 2.5673 | |||

| elbow | 12.7722 | 10.7212 | ||

| hip | 11.5340 | 1.6981 | ||

| 6.2620 | 9.1534 | |||

| knee | 68.4082 | 28.0653 |

Finally the Average Relative Errors for each test was calculated and shown in the Table 9.

Table 9 Relative Errors for Real Posture – NAO Robot

| Test | Average Relative Errors Person Robot (%) |

| Test 1 | 18.74 |

| Test 2 | 15.08 |

| Test 3 | 43.20 |

| Test 4 | 23.16 |

| Test 5 | 17.43 |

Only the Average Relative Errors of the arms was used to compared with the results of the state of the art, due the total error of the posture was not included as a result on the referenced paper. Both results are shown on the Table 10.

Table 10 Results of the state of the art

| Test | Arms average errors (%) |

| Method [20] | 18 |

| Proposed method | 19 |

4 Conclusion and Future Work

With the detailed information of limbs motions provided by the kinematic model was possible to estimate the joint angles without complex equations. These angles were used as control system’s set points that controls the joint’s motion speed.

The maximum and minimum joint’s angles from human and robots are slightly different, due the maximum angles that the robot can perform were lower than the angles that a human can perform.

Even with this difference, the final posture errors resulted only 1% different than the result obtained in a similar work of the state of the art. The method proposed on the state of art [20] used a standard library to locate the human joints based on the information of the RGB sensor and the depth sensor.

The 3-D location of the joints were estimated only with one plane image. This is the main disadvantage of the method due the algorithm cannot estimate the 3-D position of a joint if the depth sensor cannot measure the complete body of the person, for example, if the RGB sensor and the depth sensor can only measure the left side of the human body, the 3-D location of the joint and the teleoperation cannot be performed due the information of the missing parts cannot be inferred.

Additionally, the OpenNI library requires a special illumination because the location of the joints cannot be estimated if the RGB image does not have a specific range of colors.

The proposed method in this paper, the 3-D analysis of the person posture was made with the information of 4 plain images from different points of view. Hence, the 3-D location of the joints are more reliable than the method where the 3-D location is estimates using only one image.

Furthermore, the limitation which the person’s joints must be located in front of 2 sensor was eliminated. The artificial intelligence algorithm [2] provided the joints location no matter where the person was located on the range of the 4 cameras. The measurements were not affected by the lighting changes due the artificial intelligence algorithm was trained with different kind of images, those images included bright and dark images. The robot’s final posture from Test 3 was affected by the anti-fall safety system programmed by the manufacturer. This can be seen in the error values from the hip and knee.

Thank of the human intervention, the robot could perform a complex posture avoiding the anti-fall safety system activation getting better errors values of the hip and knee. The human body can keep the balance naturally and unconsciously, but the robot cannot do it. That it is the main reason why the manufacturer included the anti-fall safety system.

The fuzzy control algorithm had a favourable performance moving smoothly the robot’s limbs to the set-points. The joints speed was decreased when the angle measured by the joint sensor got close to the set-point.

The fuzzy membership functions were correctly set due the maximum operation range and the speed configuration of the NAO limbs were knew that are included in the technical documentation of the manufacturer.

The future research will be focused on test and develop new deep learning models and fuzzy control algorithms to improve the measurement of human limbs and motion control, also the hands and finger motion will be included on the future 3-D models. The main application to focus will be the medicine and mining.

For medicine, the application to focus will be the surgeon robotics. Nowadays, the surgeon robots are teleoperated through haptic devices and specialist surgeons require many training hours to properly operate it. The motion capture through computer vision to teleoperate a robot will reduce the training time, due the surgeons will need only move their hands as they normally do at surgeon procedure, the haptic devices are not going to be required anymore.

For mining, the research will be focus to eliminate the risk to get injured or dead at work or explore a mine. The labor will teleoperate a robot from a safe distance, the robot in the mine will mimic the labor’s movements. If the mine collapse, explode or has a water leak, the human life will be safe and the only lost will be the robot.

nueva página del texto (beta)

nueva página del texto (beta)