1 Introduction

Electricity is essential to the economic development of nations, and one of the main challenges facing the electricity sector today is to provide reliable and cost-effective services [1,2]. The Electrical Union of Cuba (UNE, its Spanish acronym) develops the Business Management System of the Electrical Union (SIGE), which focuses on the automation of electrical processes [3]. SIGE is composed of two main subsystems: the Integral System of Network Management (SIGERE) and the Integral Management System of the Electrical Industry Construction Enterprise (SIGECIE).

SIGERE and SIGECIE collect technical, economic and management data and convert them into information. These data facilitate and improve the efficiency of the operation, use, analysis, planning and management of the electricity distribution and transmission networks. The two systems constitute the databases of a Geographic Information System (GIS) that forms part of SIGE.

SIGERE and SIGECIE are complex systems, with 36 modules in use and a database of 716 tables, 1303 stored procedures and 74 functions; further functionality is under development. An average action in the system involves approximately nine tables with different attributes.

Querying a specific topic requires knowledge of how the database is organized. Despite the number of stored queries, they still do not cover the customers' needs, owing to the operational dynamics of a national electro-energy system.

To address this problem, the literature is analyzed and a group of experts on the subject is convened, who identify the following limitations:

— Rudimentary methods of query elaboration.

— The functional relationships between the elements of the electrical system are not described in the database.

— Important concepts of the electrical system are missing from the database.

As a first step toward solving these problems, a lightweight ontology is added to the system to give it a conceptual basis. The conceptualization comprises the concepts, their taxonomy and their relations (object properties); the remaining components of the ontology model (data properties, instances and axioms) are not developed, because that information is already in the database that feeds the system.

A weakness of these systems is users' dissatisfaction with the available queries. If a static query is developed for each problem that arises, the database accumulates a set of rarely used queries. To solve this, the system must be able to generate intelligent queries in real time that use the knowledge obtained from previous ones. Hence, the objective of this research is to develop real-time intelligent queries, based on existing knowledge, that facilitate decision making in the electrical energy transmission and distribution processes.

2 Analysis of the Methodological Basis

2.1 Geographic Information System

Spatial analysis technologies play an important role in the planning, monitoring and management of electricity networks [4]. Among the most important problems identified in research on geographic information systems are those arising from data heterogeneity and interoperability [5]. An increasingly dominant strategy for solving them, in the organization of information, is associated with the term "ontology" [6]. A spatial ontology is one whose nodes correspond to objects that occupy space [7]; it serves as an intermediate layer and allows the discovery of hidden knowledge, such as spatial arrangements [8].

In 2013, an approach was proposed for the semantic integration of geospatial data at a low level of abstraction using the Ontology of Representation of Data (ORD) [9]. In 2014, Tolaba et al. [10] proposed a geospatial meta-ontology, a 5-tuple Meta-ontology = {C, R, A, X, I} formed by sets of concepts, relationships, attributes, axioms and rules, and instances.

Additionally, the current trend in the integration of geospatial information uses semantics as a fundamental element. In 2018, Jelokhani-Niaraki, Sadeghi-Niaraki and Choi [11] studied how to integrate environmental ontologies, GIS and multicriteria decision analysis. Several studies integrate building information modeling (BIM) and city information modeling [12-15]. Other works focus on developing smart cities through knowledge integration, such as the European FP7 DIMMER project [16]. Within the electricity sector, several GIS have been analyzed; the most relevant are listed in Table 1.

Table 1 GIS in the electricity sector

| GIS | Includes semantics | Areas |
|---|---|---|
| GIS based on CityGML with 3D representation [17] | Yes | Focuses on the 3D representation of sunlight |
| NCRM [18] | No | Focuses on the study of electrical demand |
| PADEE 2016, Intelligent Plans Program [19] | No | Covers distribution and transmission |
| WindMilMap by Milsoft Utility Solutions [20] | No | Covers distribution and transmission |
| GIS on solar radiation in the province of Manabí, Ecuador [21] | No | Covers renewable energy sources |

The GIS designed for electricity companies cover a wide spectrum of information and tend to specialize in a specific area, such as renewable energy sources, loss studies or commercial aspects. However, their use of semantic elements is limited, and they do not include automatic queries.

With the development of ontologies, a GIS can be endowed with a conceptual basis, but it has not yet been possible to strengthen the decision-making process with automatic queries. If every static query needed to answer user requests is stored, the size of the database grows rapidly. The problem is therefore how to derive intelligent queries from stored ones when the domain is not completely represented.

2.2 Case-Based System

Artificial Intelligence (AI) is the branch of computer science that attempts to reproduce the processes of human intelligence by means of computers [22]. Within AI, Expert Systems (ES), or Knowledge-Based Systems (KBS), emerged in the 1970s as a field concerned with knowledge acquisition, knowledge representation and the generation of inferences from that knowledge. KBS can be built in different ways, depending on the knowledge representation and the inference method implemented; they include Rule-Based Systems, Probability-Based Systems, Expert Networks and Case-Based Systems, also called Case-Based Reasoning (CBR) systems, among others.

CBR systems emerged as a way to ease the knowledge engineering process and are based on the premise that similar problems have similar solutions [23]. On this basis, the solution to a problem is retrieved from a memory of solved examples: the most similar previous experiences are used to find the new solution [24,25].

A CBR system needs a collection of experiences, called cases, stored in a case database; each case usually consists of the problem description and the solution applied [26]. Case-based reasoning supports progressive learning, so the domain does not need to be fully represented [27,28].

A CBR system has three main components: a user interface, a knowledge base and an inference engine [29,30].

2.2.1 Case Database

A case contains information that is useful in a specific context; the problem is to identify the attributes that characterize the context and to detect when two contexts are similar. Kolodner defines a case as "a contextualized piece of knowledge that represents an experience", described by the values assigned to its predictive and objective traits [31].

To provide the system with a conceptual basis, the traits can be organized through an ontology. The fundamental role of an ontology is to structure and retrieve knowledge and to promote its exchange and communication [32-34]. Moreover, relying solely on CBR in distributed and complex applications can make systems ineffective at knowledge acquisition and indexing [35].

According to Bouhana et al. [36], the use of ontologies in case-based reasoning offers several benefits.

2.2.2 Inference Engine

CBR follows a cycle, the so-called 4R cycle, with the following stages [37]:

— A new problem is sent to the Recovery module, which searches the case database (CDB) and retrieves (Retrieve) similar problems or cases.

— The similar cases are sent to the Adapter module, which builds a solution to the problem by reusing (Reuse) the proposals of the retrieved cases.

— Once a solution is found, the proposal is reviewed if necessary (Revise) and stored (Retain), together with the problem description, in the case database. The stored proposal constitutes a new case.
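The cycle can be sketched as a small generic Java class (Java 17 is assumed, since the Results section reports a Java implementation). This is an illustrative skeleton, not SICUNE's code: the class name `CbrCycle`, single-nearest-case retrieval, and a validity predicate standing in for the Revise step are all assumptions.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.function.BiFunction;
import java.util.function.Predicate;
import java.util.function.ToDoubleBiFunction;

/** Hypothetical skeleton of the 4R cycle; P = problem description, S = solution. */
class CbrCycle<P, S> {
    /** A case pairs a problem description with the solution that was applied. */
    record Case<Q, T>(Q problem, T solution) {}

    private final List<Case<P, S>> caseBase = new ArrayList<>();
    private final ToDoubleBiFunction<P, P> distance;   // global dissimilarity between problems
    private final BiFunction<P, Case<P, S>, S> adapt;  // Reuse: turns an old case into a proposal
    private final Predicate<S> isValid;                // Revise: accepts or rejects a proposal

    CbrCycle(ToDoubleBiFunction<P, P> distance, BiFunction<P, Case<P, S>, S> adapt,
             Predicate<S> isValid) {
        this.distance = distance;
        this.adapt = adapt;
        this.isValid = isValid;
    }

    void add(P problem, S solution) { caseBase.add(new Case<>(problem, solution)); }

    int size() { return caseBase.size(); }

    /** Runs one cycle; returns the revised solution, or null if revision rejects it. */
    S solve(P problem) {
        // Retrieve: the stored case with the smallest distance to the new problem
        Case<P, S> nearest = caseBase.stream()
                .min(Comparator.comparingDouble(
                        (Case<P, S> c) -> distance.applyAsDouble(problem, c.problem())))
                .orElseThrow();
        // Reuse: adapt the retrieved solution to the new problem
        S proposal = adapt.apply(problem, nearest);
        // Revise: discard proposals that fail validation
        if (!isValid.test(proposal)) return null;
        // Retain: store the new problem with its solution as a new case
        caseBase.add(new Case<>(problem, proposal));
        return proposal;
    }
}
```

In the proposed system, the distance and adaptation roles are played by the Recovery and Adapter modules; here they are plain functions so that the four stages stay visible.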

2.2.3 Recovery Module

This stage is critical within the CBR cycle: if the retrieved case is not adequate, the problem cannot be solved correctly and the system makes a mistake. It is therefore necessary to establish how similarity between cases is measured and then to identify the most similar case [38].

The efficacy of a KBS of this kind depends on how well similarity is estimated, and several authors note that this is the most difficult point when implementing a CBR system [39]. Selecting a suitable distance function is fundamental to the performance of any instance-based classification algorithm [40].

The literature offers several approaches to selecting a distance function. One proposes first establishing the differences between objects, phenomena or events, and hence their dissimilarity; similarity only begins to play a role once the differences have been observed and characterized. Its proponents conclude that dissimilarity is more important than similarity and build their theories on that concept [41]. In contrast, there are many practical examples in which analogy, likeness or similarity provides the basic information for recognition [42].

The value assigned to an attribute to indicate its relative importance with respect to the other attributes is called its weight. The weight of an attribute can be set by expert criteria, raising or lowering its rank among the attributes, or obtained by a method that learns from the data themselves.

The greater the weight of an attribute, the greater its importance. For n attributes, the set of weights is defined as:

$$W = \{w_1, w_2, \ldots, w_n\}$$

There are different procedures for weighting attributes, such as proportion, paired comparisons, the Analytic Hierarchy Process (AHP) and SMART. These methods vary in difficulty, theoretical assumptions and rigor. Based on this analysis, expert criteria are used to set the weights in this research, giving greater weight to the ontological traits.
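As an illustration of the proportion procedure, expert points can be normalized so that the weights sum to 1. The normalization rule, the class name `TraitWeights` and the point values in the usage example are hypothetical; the text only states that the ontological traits receive the greatest weight.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Proportional (SMART-style) weighting: each trait's points divided by the total. */
class TraitWeights {
    static Map<String, Double> normalize(Map<String, Double> points) {
        double total = points.values().stream().mapToDouble(Double::doubleValue).sum();
        if (total <= 0) {
            throw new IllegalArgumentException("expert points must sum to a positive value");
        }
        Map<String, Double> weights = new LinkedHashMap<>();
        points.forEach((trait, p) -> weights.put(trait, p / total)); // w_i = points_i / total
        return weights;
    }
}
```

For instance, giving 30 of 100 points to each of the two ontological traits (OP and OG in Table 5) and 5 or 10 points to each remaining trait yields a weight of 0.3 for each ontological trait.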

The traits of a case are almost always of different types, and previous studies analyze how to find the optimal similarity measure in such cases [43]. An important issue is choosing an algorithm that balances precision and efficiency, providing good classification performance within a reasonable response time [44].

The k-nearest-neighbors rule can model problems whose object descriptions contain mixed and incomplete data, which makes it an attractive tool in practice [42]. The Recoverer retrieves the k cases closest to the user's query, that is, those with the smallest global dissimilarity.

The set of k retrieved cases is the input of the Adapter module. The distance measures for each type of trait are analyzed below.

To evaluate the effectiveness of the character-based similarity measures, leave-one-out cross-validation (LOOCV) is used; the results are shown in Table 3. In each iteration, this method holds out a single datum for testing and uses the rest of the data for training. Jaro-Winkler gives the best results.
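The leave-one-out procedure can be sketched as follows, assuming a 1-nearest-neighbour classifier over labelled query strings and a pluggable string distance. `Loocv` and `Sample` are hypothetical names, and the data behind Table 3 are not reproduced.

```java
import java.util.List;
import java.util.function.ToDoubleBiFunction;

/** Leave-one-out cross-validation with a 1-nearest-neighbour classifier. */
class Loocv {
    /** A labelled example: a query text and the class of case it belongs to. */
    record Sample(String text, String label) {}

    /** Counts samples whose nearest neighbour among the remaining samples has the same label. */
    static int countCorrect(List<Sample> data, ToDoubleBiFunction<String, String> dist) {
        int correct = 0;
        for (int i = 0; i < data.size(); i++) {
            Sample test = data.get(i);               // the single held-out datum
            Sample nearest = null;
            double best = Double.POSITIVE_INFINITY;
            for (int j = 0; j < data.size(); j++) {
                if (j == i) continue;                // all other samples form the training set
                double d = dist.applyAsDouble(test.text(), data.get(j).text());
                if (d < best) {
                    best = d;
                    nearest = data.get(j);
                }
            }
            if (nearest != null && nearest.label().equals(test.label())) correct++;
        }
        return correct;
    }
}
```

Running `countCorrect` once per string distance gives one column of a table like Table 3.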

Table 3 Results of leave-one-out cross-validation (number of cases classified correctly)

| Configuration | Affine gap | Levenshtein | Q-gram (N = 2) | Jaro | Jaro-Winkler |
|---|---|---|---|---|---|
| (1) | 29 | 32 | 29 | 47 | 54 |
| (2) | 32 | 35 | 32 | 116 | 121 |
| (3) | 90 | 94 | 96 | 95 | 99 |
| (4) | 160 | 164 | 163 | 166 | 172 |

(1) Only with the ontology trait and without adaptation.

(2) Only with the ontology trait and with initial adaptation.

(3) All traits, giving greater importance to the ontology trait, and without adaptation.

(4) All traits, giving greater importance to the ontology trait, and with initial adaptation.

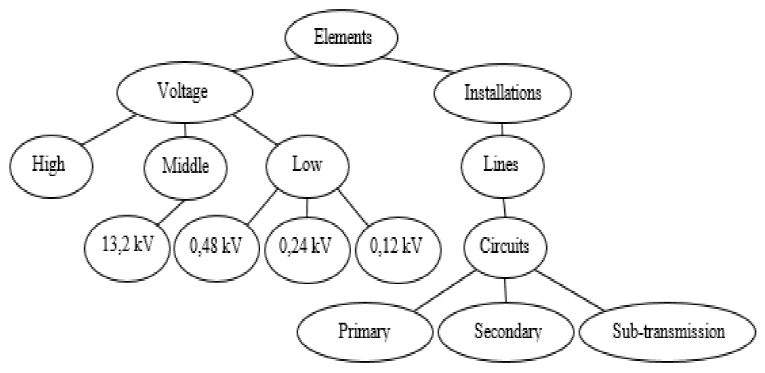

To evaluate the position-based similarity measures, the analyzed measures are applied to a fragment of the ontology (Figure 1). The results are shown in Table 4.

Table 4 Values of similarity measures

| Pair | fconst | fdeep | cosine |
|---|---|---|---|
| S(13.2 kV, 0.48 kV) | c | ½ | ⅔ |
| S(0.48 kV, 0.24 kV) | c | ¾ | 1 |
| S(primary, secondary) | c | 1 | 1 |
| S(13.2 kV, primary) | c | 0 | |


2.2.4 Distance Functions Based on Ontologies

Ontology-based distance functions compare two ontologies (or parts of them) and measure the similarity between them. Two levels can be considered in these functions:

— Lexical level: how terms are used to convey meanings.

— Conceptual level: the conceptual relationships between the terms (position-based and semantic similarity functions).

At the lexical level, the proposed similarity functions are classified into those based on characters and those based on full words, or tokens.

The first treat each string as a continuous sequence of characters; the second treat it as a set of substrings delimited by special characters such as blank spaces, commas and periods, and compute the distance between each pair of tokens with a character-based function. The main functions consulted, including the position-based and semantic ones, are described in Table 2. In the position-based functions, the importance of each level decreases as the tree is deepened, and two elements that separate at the first or second branch are considered totally different.
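The difference between the two families can be sketched in Java. Levenshtein serves as the character-based function; the token-based variant is an assumption modelled on the Monge-Elkan scheme: it splits on blanks, commas and periods and averages, over the tokens of the first string, the smallest normalized Levenshtein distance to any token of the second.

```java
import java.util.Arrays;

/** Character-based versus token-based string distances (illustrative sketch). */
class StringDistances {
    /** Character-based: Levenshtein edit distance (insertion, deletion, substitution). */
    static int levenshtein(String a, String b) {
        int[] prev = new int[b.length() + 1];
        int[] curr = new int[b.length() + 1];
        for (int j = 0; j <= b.length(); j++) prev[j] = j;   // distance from the empty prefix
        for (int i = 1; i <= a.length(); i++) {
            curr[0] = i;
            for (int j = 1; j <= b.length(); j++) {
                int sub = prev[j - 1] + (a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1);
                curr[j] = Math.min(sub, Math.min(prev[j] + 1, curr[j - 1] + 1));
            }
            int[] tmp = prev; prev = curr; curr = tmp;        // the row just filled becomes prev
        }
        return prev[b.length()];
    }

    /** Levenshtein scaled to [0, 1] by the length of the longer string. */
    static double normalizedLevenshtein(String a, String b) {
        int max = Math.max(a.length(), b.length());
        return max == 0 ? 0.0 : (double) levenshtein(a, b) / max;
    }

    /** Token-based: average over tokens of a of the best normalized match among tokens of b. */
    static double tokenDistance(String a, String b) {
        String[] ta = tokens(a);
        String[] tb = tokens(b);
        if (ta.length == 0 || tb.length == 0) return ta.length == tb.length ? 0.0 : 1.0;
        double sum = 0.0;
        for (String x : ta) {
            double best = 1.0;
            for (String y : tb) best = Math.min(best, normalizedLevenshtein(x, y));
            sum += best;
        }
        return sum / ta.length;
    }

    /** Splits on blanks, commas and periods, dropping empty tokens. */
    private static String[] tokens(String s) {
        return Arrays.stream(s.toLowerCase().split("[\\s,.]+"))
                .filter(t -> !t.isEmpty())
                .toArray(String[]::new);
    }
}
```

Token order does not affect the token-based value, so "transformer bank" and "bank, transformer" are at distance 0, whereas their character-based distance is large.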

Table 2 Distance functions based on ontologies

| Name | Description | Eq. |
|---|---|---|
| • Character-based distance functions | | |
| Affine gap distance [45] | Penalizes the insertion/elimination of k consecutive characters (a gap) at low cost through an affine function, $P(k) = g + h(k - 1)$, where g is the cost of opening a gap, h is the cost of extending it by one character, and $h \ll g$. | (2) |
| Smith-Waterman algorithm [46] | The maximum similarity score over all pairs (A', B') such that A' is a substring of A and B' is a substring of B. | (3) |
| Levenshtein distance [47] | The distance between two text strings A and B is the minimum number of editing operations required to transform A into B (or vice versa). The allowed operations are deletion, insertion and substitution of a character. | (4) |
| Jaro distance [48] | A distance function that defines the transposition of two characters as the only editing operation allowed. | (5) |
| Jaro-Winkler [49] | A variant of the Jaro distance that assigns higher similarity scores to strings sharing a prefix; a model based on Gaussian distributions. The Jaro distance can be calculated in O(n). | |
| Q-gram distance [50] | A q-gram, also called an n-gram, is a substring of length q. The principle behind this distance function is that when two strings are very similar, they have many q-grams in common. | |
| • Position-based similarity functions | | |
| Constant similarity function (fconst) [51] | Proposes a similarity value that increases with the depth of a node in the hierarchy. | (6) |
| Basic depth (fdeep) | Calculates the similarity values, instead of recording them manually, from the relationship between the depth of the most specific concept that contains the two compared elements and the maximum depth of the hierarchy: $f_{deep}(I_1, I_2) = \frac{\max_{C \in LCS(I_1, I_2)} Prof(C)}{\max_{C_i \in CN} Prof(C_i)}$, where CN is the set of concepts of the current knowledge base, LCS(I1, I2) is the set of most specific concepts (existing in the knowledge base) that contain the two compared elements, and Prof(Ci) is the depth of concept Ci, calculated as 1 + the number of subsumption links from TOP (the base element) to Ci. | (7) |
| Cosine similarity function (Cosine) [52] | Represents each concept by a property vector and calculates the similarity between two concepts by applying a function to the vectors that represent them. If the similarity between concepts Ci and Cj is defined as the cosine of the angle between their vectors Vi and Vj, it is given by $sim(C_i, C_j) = \frac{V_i \cdot V_j}{\lVert V_i \rVert \, \lVert V_j \rVert}$. | (8) |
| • Semantic similarity functions | | |
| Resnik algorithm [53] | One of the most important algorithms for calculating semantic similarity. The similarity between two concepts c1 and c2 of a taxonomic structure is obtained as $sim(c_1, c_2) = \max_{c \in S(c_1, c_2)} \left[-\log P(c)\right]$, where S(c1, c2) is the set of concepts that subsume both c1 and c2 and P(c) is the probability of concept c [54]. | (9) |
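The basic-depth function in Table 2 can be sketched on a single-inheritance taxonomy, where LCS(I1, I2) reduces to the deepest common ancestor. The class name `Fdeep` and the concept names in the usage example are hypothetical; they are not taken from the UNE ontology.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Basic-depth (fdeep) similarity over a single-inheritance taxonomy. */
class Fdeep {
    private final Map<String, String> parent = new HashMap<>(); // child -> parent; TOP has none

    void add(String child, String parentConcept) { parent.put(child, parentConcept); }

    /** The concept followed by its ancestors up to TOP. */
    private List<String> pathToTop(String concept) {
        List<String> path = new ArrayList<>();
        for (String c = concept; c != null; c = parent.get(c)) path.add(c);
        return path;
    }

    /** Prof(C): 1 + number of subsumption links from TOP to C. */
    int depth(String concept) { return pathToTop(concept).size(); }

    /** fdeep = Prof(LCS) / maximum depth of the hierarchy; 0 if there is no common ancestor. */
    double similarity(String a, String b) {
        List<String> ancestorsOfA = pathToTop(a);
        String lcs = null;
        for (String c : pathToTop(b)) {          // walk up from b; the first shared concept is the LCS
            if (ancestorsOfA.contains(c)) {
                lcs = c;
                break;
            }
        }
        if (lcs == null) return 0.0;
        Set<String> concepts = new HashSet<>(parent.keySet());
        concepts.addAll(parent.values());
        int maxDepth = 0;
        for (String c : concepts) maxDepth = Math.max(maxDepth, depth(c));
        return (double) depth(lcs) / maxDepth;
    }
}
```

Because the hierarchy is a tree in this sketch, LCS(I1, I2) has a single element; with multiple inheritance, the maximum over all shared most-specific concepts would be taken instead.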

2.2.5 Distance Functions for Single-Valued Features

Single-valued traits use a Boolean comparison criterion, represented by equation 10: the result is 1 if the value $x_i$ differs from $y_i$, and 0 otherwise [55]:

$$d_i(x_i, y_i) = \begin{cases} 0 & \text{if } x_i = y_i \\ 1 & \text{if } x_i \neq y_i \end{cases} \quad (10)$$

2.2.6 Distance Functions for Set-Type Traits

In many problems, traits take several values simultaneously and are naturally represented as sets.

Table 5 shows distances adapted to set-type data. Machine learning algorithms have been extended to this type of data because it models such problems more naturally [56].

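Table 5 Functions of local distance for set-type data

| Name | Local distance | Eq. |
|---|---|---|
| Jaccard | $d(A, B) = 1 - \frac{\lvert A \cap B \rvert}{\lvert A \cup B \rvert}$ | (10) |
| Czekanowski-Dice | $d(A, B) = 1 - \frac{2\lvert A \cap B \rvert}{\lvert A \rvert + \lvert B \rvert}$ | (11) |
| Sokal-Sneath | $d(A, B) = 1 - \frac{\lvert A \cap B \rvert}{\lvert A \cap B \rvert + 2\lvert A \,\triangle\, B \rvert}$ | (12) |
| Cosine | $d(A, B) = 1 - \frac{\lvert A \cap B \rvert}{\sqrt{\lvert A \rvert \, \lvert B \rvert}}$ | (13) |
| Braun-Blanquet | $d(A, B) = 1 - \frac{\lvert A \cap B \rvert}{\max(\lvert A \rvert, \lvert B \rvert)}$ | (14) |
| Simpson | $d(A, B) = 1 - \frac{\lvert A \cap B \rvert}{\min(\lvert A \rvert, \lvert B \rvert)}$ | (15) |
| Kulczynski | $d(A, B) = 1 - \frac{1}{2}\left(\frac{\lvert A \cap B \rvert}{\lvert A \rvert} + \frac{\lvert A \cap B \rvert}{\lvert B \rvert}\right)$ | (16) |

where A and B are the sets of values that the trait takes in the two cases compared and $A \triangle B$ is their symmetric difference.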

If both sets are empty, the distance functions are undefined; in that case they take the value zero. The time complexity of these distance functions is O(n), where n is the number of elements in the attribute domain.
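Two of these set distances can be sketched as follows, including the empty-set convention just described. The 1 − similarity form, the class name `SetDistances` and the use of hash sets (which keep each function linear in the set sizes) are assumptions; the usage example takes its values from the Tables trait of the example in Section 3.1.2.

```java
import java.util.HashSet;
import java.util.Set;

/** Set-type local distances (1 - similarity), defined as 0 when both sets are empty. */
class SetDistances {
    private static int intersectionSize(Set<String> a, Set<String> b) {
        Set<String> common = new HashSet<>(a);
        common.retainAll(b);
        return common.size();
    }

    /** Jaccard: 1 - |intersection| / |union|. */
    static double jaccard(Set<String> a, Set<String> b) {
        if (a.isEmpty() && b.isEmpty()) return 0.0;
        int common = intersectionSize(a, b);
        int union = a.size() + b.size() - common;
        return 1.0 - (double) common / union;
    }

    /** Czekanowski-Dice: 1 - 2 |intersection| / (|A| + |B|). */
    static double dice(Set<String> a, Set<String> b) {
        if (a.isEmpty() && b.isEmpty()) return 0.0;
        return 1.0 - 2.0 * intersectionSize(a, b) / (a.size() + b.size());
    }
}
```

For the table sets {Punto, BTransf, Cap, Transf} and {Punto, BTransf, VoltSist, Transf}, three of five distinct tables are shared, so the Jaccard distance is 0.4 and the Dice distance is 0.25.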

3 Design of the Application

3.1 Case-Based Approach for Real-Time Inquiries of the UNE

For the present research, a lightweight ontology is developed whose conceptualization contains only concepts, their taxonomy and relations (object properties); the remaining components of the ontology model (data properties, instances and axioms) are not developed because they already exist in the SIGERE and SIGECIE databases.

The ontology was built following the Methontology methodology with the Protégé tool [57]. The CBR system was developed from an analysis of the existing queries and of the database, which made it possible to define the case database and inference engine described next.

3.1.1 Cases Database

An analysis of the queries carried out, including those of SIGERE, establishes the structure of a case, which is divided into predictive traits and objective traits (Figure 2). To clarify the proposed structure, Table 5 identifies the universe of discourse of the predictive and objective traits.

Table 5 Universe of discourse of predictive and objective traits

| Trait | Possible values | Type |
|---|---|---|
| Predictive traits | | |
| NV | Secondary, Primary, Subtransmission, Transmission | Symbolic and single-valued |
| EB | Poles, Transformer banks, Capacitor banks, Generator groups, Disconnectors, Structures, Lamps, Transmission Circuit, Subtransmission Circuit, Primary Circuit, Secondary Circuit, Lighting Circuit, Distribution Substation, Transmission Substation | Symbolic and single-valued |
| AT | Attributes to be returned by the query (code, voltage, name, etc.) | Set |
| Tables | SIGERE tables involved in the query (Accessories, Actions, Connection, Interruptions, Line, CurrentSupplyPrimary, etc.) | Set |
| CA | Element to compare (attribute being compared) | Symbolic and single-valued |
| ON | Operator (∪, ∩, ≤, ≥, =, like, etc.) | Symbolic and single-valued |
| OP | Ontology in description logic, e.g. T ∩ TP ∩ TMonophase ¬ SSecondary | Ontology |
| OG | Spatial constraint (description logic) | Ontology |
| Objective traits | | |
| From | Returns the FROM clause of the query to SIGERE | String |
| Where | Returns the WHERE clause of the query to SIGERE | String |
| CE | Returns the GIS query | String |

The ontological traits ON and OG, represented in description logic, have the greatest weight within the case. A possible value of the ON trait is T ∩ TPot ∩ TMonophase ∩ ¬SSecondary, which expresses that the element is a monophasic primary transformer with no secondary output. OG works similarly, but its relationship is spatial; an example referring to the location of an element is P ∩ Prov ∩ Muncp.

This example expresses that an element belongs to the country (P), to a province (Prov) and to a municipality (Muncp).

The deeper the ontology, the more flexibly the element being queried can satisfy the user's requirements. For one element to be similar to another, it does not need to be at the same level of the ontology, but it must lie on the same branch. The importance of each level decreases as one goes deeper into the tree.

The information in each case and its representation must be defined in order to facilitate access to and retrieval of cases from the case database, and to establish how the database is organized [58,59].

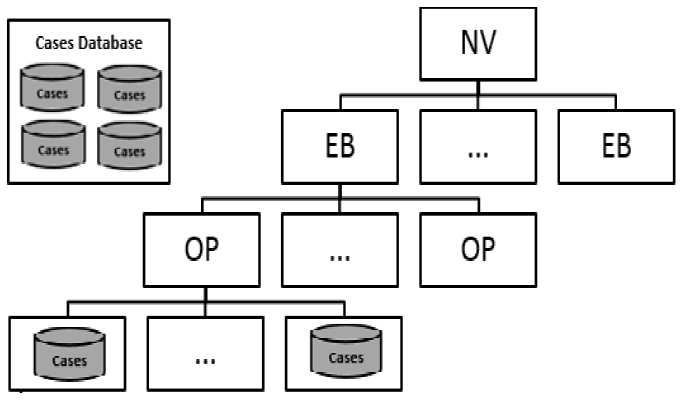

Accordingly, the different ways of organizing a case database were analyzed, and a hierarchical structure is proposed because it enables timely retrieval of the examples most similar to the query.

Figure 3 shows the organization of the case database, where:

— the predictive trait NV is the root node;

— the EB and OP traits are added at the second and third levels, respectively, since they are the most discriminative elements;

— the leaf nodes hold the subsets of cases whose NV, EB and OP values match.
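The three-level organization can be sketched with nested hash maps, so that locating the leaf for a query costs three lookups. `CaseIndex`, the case identifiers and the trait values in the usage example are hypothetical placeholders, and what retrieval does when a branch is empty (for example, widening the search) is not shown.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Three-level index over the case base: NV (root) -> EB -> OP -> leaf list of case ids. */
class CaseIndex {
    private final Map<String, Map<String, Map<String, List<String>>>> root = new HashMap<>();

    void add(String nv, String eb, String op, String caseId) {
        root.computeIfAbsent(nv, k -> new HashMap<>())
            .computeIfAbsent(eb, k -> new HashMap<>())
            .computeIfAbsent(op, k -> new ArrayList<>())
            .add(caseId);
    }

    /** Cases whose NV, EB and OP values all match; empty if the branch does not exist. */
    List<String> candidates(String nv, String eb, String op) {
        return root.getOrDefault(nv, Map.of())
                   .getOrDefault(eb, Map.of())
                   .getOrDefault(op, List.of());
    }
}
```

Only the cases in the matching leaf then need to be scored with the global distance.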

3.1.2 Inference Engine

The inference engine is the system's reasoning machine: it compares the input problem with those stored in the case database and infers a response by adapting the most similar cases retrieved. The following explains how each part of the cycle works in the proposed system.

Recovery

The recovery module is responsible for extracting from the case database the case or cases most similar to the current situation.

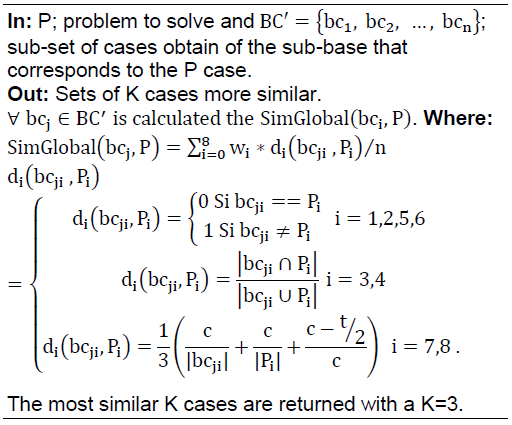

Global similarity is measured as the weighted sum of the distances between the value of each trait in a stored case and the value it takes in the problem case, as given by equation 17; smaller values indicate more similar cases. The distances are weighted according to expert criteria with a weight $w_i$: the greater $w_i$, the greater the importance of the trait. The most important traits are the ontological ones:

$$D(X, Y) = \sum_{i=1}^{n} w_i \, d_i(x_i, y_i) \quad (17)$$

where $X$ is the problem case, $Y$ is a stored case, $n$ is the number of traits, and $x_i$, $y_i$ are the values of trait $i$ in $X$ and $Y$.

The local distance $d_i(x_i, y_i)$ is determined by the data type of $x_i$ and $y_i$. In the case presented here there are three data types, each with its own distance measure, described below.

The traits NV, EB, CA and OP are symbolic and single-valued, so a Boolean distance is used for them. The AT and Tables traits are of set type; based on the tests performed on the system, the Jaccard distance, which relies on the set operations union and intersection, is implemented for them.
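The weighted sum of equation 17 with these local distances can be sketched as below. The `Query` record holds only a subset of the traits, the weight order {NV, EB, AT, Tables, ontology} is a hypothetical convention, and the ontological trait is compared with a pluggable string distance (the text that follows selects Jaro-Winkler for it; the usage example uses a plain equality test).

```java
import java.util.HashSet;
import java.util.Set;
import java.util.function.ToDoubleBiFunction;

/** Weighted global dissimilarity, D(X, Y) = sum_i w_i * d_i(x_i, y_i). */
class GlobalDistance {
    /** Hypothetical, simplified case description holding a subset of the predictive traits. */
    record Query(String nv, String eb, Set<String> at, Set<String> tables, String ontology) {}

    /** Boolean distance for symbolic single-valued traits: 0 if equal, 1 otherwise. */
    static double overlap(String x, String y) {
        return x.equals(y) ? 0.0 : 1.0;
    }

    /** Jaccard distance for set-type traits; 0 when both sets are empty. */
    static double jaccard(Set<String> x, Set<String> y) {
        if (x.isEmpty() && y.isEmpty()) return 0.0;
        Set<String> common = new HashSet<>(x);
        common.retainAll(y);
        Set<String> union = new HashSet<>(x);
        union.addAll(y);
        return 1.0 - (double) common.size() / union.size();
    }

    /** Weights in the order {NV, EB, AT, Tables, ontology}; ontDist compares the ontology strings. */
    static double distance(Query x, Query y, double[] w, ToDoubleBiFunction<String, String> ontDist) {
        double[] local = {
            overlap(x.nv(), y.nv()),
            overlap(x.eb(), y.eb()),
            jaccard(x.at(), y.at()),
            jaccard(x.tables(), y.tables()),
            ontDist.applyAsDouble(x.ontology(), y.ontology())
        };
        double total = 0.0;
        for (int i = 0; i < local.length; i++) total += w[i] * local[i];
        return total;
    }
}
```

With weights summing to 1 and every local distance in [0, 1], the global value also stays in [0, 1], which makes a fixed acceptance threshold easier to choose.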

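The OG and OE traits represent the general and spatial ontologies. The similarity measure that gave the best results on a control sample and in a field test was the Jaro-Winkler distance (equation 18):

$$d_{jw}(x_j, y_j) = \begin{cases} d_{jaro}(x_j, y_j) & \text{if } d_{jaro}(x_j, y_j) < b_t \\ d_{jaro}(x_j, y_j) + l \, p \, \bigl(1 - d_{jaro}(x_j, y_j)\bigr) & \text{otherwise} \end{cases} \quad (18)$$

where $l$ is the length of the common prefix of the strings, up to a maximum of 4 characters; $p$ is a constant scaling factor that must not exceed 0.25, otherwise the value can exceed 1 (0.1 is the standard value); $b_t$ is the defined threshold (0.7 is recommended); and $d_{jaro}$ is the Jaro distance between the strings $x_j$ and $y_j$, defined by equation 19:

$$d_{jaro}(x_j, y_j) = \begin{cases} 0 & \text{if } m = 0 \\ \frac{1}{3}\left(\frac{m}{\lvert x_j \rvert} + \frac{m}{\lvert y_j \rvert} + \frac{m - t}{m}\right) & \text{otherwise} \end{cases} \quad (19)$$

where $m$ is the number of matching characters (identical characters no more than $\lfloor \max(\lvert x_j \rvert, \lvert y_j \rvert)/2 \rfloor - 1$ positions apart) and $t$ is half the number of matching characters that appear in a different order.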
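Equations 18 and 19 can be sketched in Java as below. As in the text, the returned value behaves as a similarity (1 for identical strings), so a distance for equation 17 would be taken as one minus it; that conversion and returning 1.0 for two empty strings are assumptions, while the defaults p = 0.1 and b_t = 0.7 follow the text.

```java
/** Jaro and Jaro-Winkler values as in equations 18 and 19 (illustrative sketch). */
class JaroWinkler {
    /** Jaro value: (m/|s1| + m/|s2| + (m - t)/m) / 3, or 0 when there are no matches. */
    static double jaro(String s1, String s2) {
        if (s1.isEmpty() && s2.isEmpty()) return 1.0;
        int window = Math.max(0, Math.max(s1.length(), s2.length()) / 2 - 1);
        boolean[] matched1 = new boolean[s1.length()];
        boolean[] matched2 = new boolean[s2.length()];
        int m = 0;
        for (int i = 0; i < s1.length(); i++) {          // find matches within the window
            int lo = Math.max(0, i - window);
            int hi = Math.min(s2.length() - 1, i + window);
            for (int j = lo; j <= hi; j++) {
                if (!matched2[j] && s1.charAt(i) == s2.charAt(j)) {
                    matched1[i] = true;
                    matched2[j] = true;
                    m++;
                    break;
                }
            }
        }
        if (m == 0) return 0.0;
        int outOfOrder = 0;                               // matched characters in a different order
        for (int i = 0, k = 0; i < s1.length(); i++) {
            if (!matched1[i]) continue;
            while (!matched2[k]) k++;
            if (s1.charAt(i) != s2.charAt(k)) outOfOrder++;
            k++;
        }
        double t = outOfOrder / 2.0;
        return ((double) m / s1.length() + (double) m / s2.length() + (m - t) / m) / 3.0;
    }

    /** Winkler boost, applied only when the Jaro value reaches the threshold bt. */
    static double jaroWinkler(String s1, String s2, double p, double bt) {
        double dj = jaro(s1, s2);
        if (dj < bt) return dj;
        int l = 0;
        int maxPrefix = Math.min(4, Math.min(s1.length(), s2.length()));
        while (l < maxPrefix && s1.charAt(l) == s2.charAt(l)) l++;
        return dj + l * p * (1.0 - dj);
    }
}
```

For example, `jaroWinkler("MARTHA", "MARHTA", 0.1, 0.7)` gives about 0.961, against a plain Jaro value of about 0.944, because the strings share a three-character prefix.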

The recoverer, based on Algorithm 1, obtains the k cases closest to the query requested by the user, using equation 17 to calculate the distance. Based on the evaluation of the system, k = 3 is selected by default. The set of cases obtained by Algorithm 1 is the input of the adapter module.
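Algorithm 1 itself is not reproduced in this section; the following is only a generic k-nearest-neighbour sketch of the retrieval step, assuming the global dissimilarity of equation 17 is supplied as a function of each stored case. `KnnRetriever` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.ToDoubleFunction;

/** Generic k-nearest-neighbour retrieval over a case base. */
class KnnRetriever {
    static final int DEFAULT_K = 3;

    /** A case paired with its precomputed distance to the query. */
    private record Scored<T>(T item, double distance) {}

    /** Returns the k cases with the smallest dissimilarity to the query, closest first. */
    static <C> List<C> retrieve(List<C> caseBase, ToDoubleFunction<C> distanceToQuery, int k) {
        List<Scored<C>> scored = new ArrayList<>();
        for (C c : caseBase) {
            scored.add(new Scored<>(c, distanceToQuery.applyAsDouble(c))); // each distance computed once
        }
        scored.sort((p, q) -> Double.compare(p.distance(), q.distance())); // stable: ties keep order
        List<C> nearest = new ArrayList<>();
        for (int i = 0; i < Math.min(k, scored.size()); i++) nearest.add(scored.get(i).item());
        return nearest;
    }
}
```

With five integer "cases" and the distance to 4, `retrieve` returns [5, 3, 1]; ties keep their case-base order because `List.sort` is stable.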

Reuse and Adaptation

To adapt a retrieved case, it is combined with the new one by reusing information. The solution is proposed through similarity mechanisms that determine how close the retrieved case is to the new one.

The problem is solved by transformational analogy, which transforms the solution of a previous problem into the solution of the new problem. Solutions are seen as states in a search space called T-space, and T-operators describe the methods for moving from one state to another. Reasoning by analogy is thus reduced to searching the T-space: starting from an old solution and using difference analysis (or another method) until a solution to the current problem is found.

The input of the adaptation module is an initial solution consisting of the three objective traits. The module reuses and adapts this solution by transformational analogy, which implies structural changes to it. Transformational adaptation is guided by common-sense rules, defined beforehand, that direct the adaptation process. The process is viewed as a search in T-space, in which the known solution (SC) is transformed with T-operators (Table 6) until it becomes the solution of the new problem.

Table 6 T-operators according to the objective trait

| From trait | Where trait | CE trait |
|---|---|---|
| Coupling insertion | Insertion of restriction | Insertion of restriction |
| Coupling removal | Removal of restriction | Removal of restriction |
| Coupling replacement | Substitution in restriction | Substitution in restriction |

Each T-operator is defined by a set of rules that perform its operation. These rules are chained to insert, eliminate or replace part of the solution, adapting it to the needs of the current problem while satisfying the restrictions imposed by the experts in the domain ontologies and the natural requirements of the objective traits [60]. The adaptation module has three stages, described below.
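The three T-operators of Table 6 can be sketched as pure functions over a solution part represented as a list of clauses (FROM couplings or WHERE restrictions). This representation and the class name `TOperators` are assumptions, and the rules that decide when each operator is applied are not modelled.

```java
import java.util.ArrayList;
import java.util.List;

/** Insert, remove and substitute operators over an ordered list of clauses. */
class TOperators {
    /** Insertion: append the clause unless it is already present. */
    static List<String> insert(List<String> parts, String clause) {
        List<String> out = new ArrayList<>(parts);
        if (!out.contains(clause)) out.add(clause);
        return out;
    }

    /** Removal: drop the first occurrence of the clause, if any. */
    static List<String> remove(List<String> parts, String clause) {
        List<String> out = new ArrayList<>(parts);
        out.remove(clause);
        return out;
    }

    /** Substitution: replace the old clause in place, keeping the order of the others. */
    static List<String> substitute(List<String> parts, String oldClause, String newClause) {
        List<String> out = new ArrayList<>(parts);
        int i = out.indexOf(oldClause);
        if (i >= 0) out.set(i, newClause);
        return out;
    }

    /** Renders WHERE restrictions joined with AND. */
    static String where(List<String> restrictions) {
        return String.join(" AND ", restrictions);
    }
}
```

Substituting the restriction (VoltSist.Volt < @VC) with (Cap.Cap > @VC), and the VoltSist coupling with the Cap coupling, is the kind of change that turns the initial solution of the example in this section into the adapted one.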

In stage one, the three objective traits are reviewed by an algorithm of 25 rules that checks which traits are absent, which are valid and which need to be adapted.

In stage two, the set of rules to be applied is chosen according to the adaptation requirements found in the previous stage, as follows:

— If there are no requirements, the initial solution is returned without adaptation.

— If there is a requirement for the From trait, the set of rules that adapts the From trait is applied.

— If there is a requirement for the Where trait, the set of rules that adapts the Where trait is applied.

— If there is a requirement for the CE trait, the set of rules that adapts the CE trait is applied.

In stage three, a total of 65 adaptation rules are applied, divided into subsets among four methods.

The first and second methods contain the 24 rules that adapt the From trait. This requirement can arise because:

— the initial From trait is absent, since it is an empty string; or

— the solution does not contain the correct base table. The rules find the correct base table for the case and substitute it in the initial solution. If the replaced base table was coupled to the new one, the substitution would leave a coupling of the new base table with itself and leftover tables in the result; applying rule seven eliminates this type of coupling.

After the base element is selected, the remaining missing tables are added.

The third method contains the 27 rules that adapt the Where trait. These rules are executed when the revision step establishes that the Where trait needs to be adapted. This requirement can arise because:

— the initial Where trait is absent (an empty string) although the case has restrictions;

— the solution does not have the correct OP;

— the solution does not have the correct AC;

— the solution does not conform to the general ontology of the SIGERE system regarding output, phase and type of installation.

The fourth method contains the 13 rules that adapt the CE trait. These rules are executed when the revision step establishes that the CE trait needs to be adapted, which happens when a term of the spatial ontology is absent from the query.

Retaining or Learning a New Case

After the review stage confirms that the adaptation is correct, the new case is retained in the CDB, which is thus enriched with the solutions to new problems.

Example of Cases

To illustrate the model, consider a user request expressed in technical language: "Banks of transformers with a capacity greater than 15 kV".

When the search is entered, it is passed to the technical language processor, which calculates the phonetic value of the words.

Semantic analysis is then carried out to determine the concepts involved and, from them, the fundamental tables and attributes.

The predictive traits are identified as shown in Table 7. With these data, the input to the recovery module is defined, and the module returns the initial solution shown in Table 8.

Table 7 Predictive traits of the example query

| Trait | Value |
|---|---|
| NV | Primary |
| EB | Transformer bank |
| AT | CodInst, Secc, Circuito, Cod, Cap, Nempresa |
| Tables | Punto, BTransf, Cap, Transf |
| AC | Cap |
| OP | > |
| ON | BT ∩ T ∩ TPot ∩ Tcto |
| OE | P ∩ Prov |

Table 8 Initial solution

| Trait | Value |
|---|---|
| NV | Primary |
| EB | Transformer bank |
| AT | CodigoInst, Seccionalizador, Circuito, Cod, Volt, Nempresa |
| Tables | Punto, BTransf, VoltSist, Transf |
| AC | Voltaje |
| OP | < |
| ON | BT ∩ T ∩ TPot ∩ Tcto |
| OE | P ∩ Prov |
| FROM | Transf INNER JOIN BTransf ON Transf.Cod = BTransf.Cod INNER JOIN Punto ON Transf.Cod = Punto.CodInst INNER JOIN VoltSist ON Transf.Id_VoltPrim = VoltSist.Id_VoltSist |
| WHERE | (VoltSist.Volt < @VC) |
| CE | (Mun.Nombre=@VC) and (Mun.Obj Contains Postes_M.Obj) and (poste = Postes_M.CODIGO_POSTE) |

In this solution the predictive features AC and OP are not equal to the input features. Therefore, you must enter the adapter and go to stage 1, where the following rules are broken:

— If Tabla = Tablas_Solucion, then True; else False;

— If (OP ⊂ SI), then True; else False;

— If (AC ⊂ SI), then True; else False;

In stage 2, the following rule sets are executed:

— If there is a requirement for the FROM trait, the set of rules for adapting the FROM trait is applied.

— If there is a requirement for the WHERE trait, the set of rules for adapting the WHERE trait is applied.

In stage 3, the T-operators needed to adapt the FROM and WHERE traits are executed.
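The three stages can be illustrated on this example. The sketch below is hypothetical: the stage-1 rules are read as equality checks between the query and the retrieved solution, and the T-operators rely on invented lookup tables (`JOIN_CONDITION`, `COLUMN_FOR_AC`) that stand in for the schema knowledge the real system would draw from its ontology and database. The CE trait is left untouched, as in this example.

```python
# Hypothetical schema knowledge for the T-operators (not taken from the paper).
BASE_TABLE = "Transf"
JOIN_CONDITION = {
    "BTransf": "Transf.Cod = BTransf.Cod",
    "Punto": "Transf.Cod = Punto.CodInst",
    "VoltSist": "Transf.Id_VoltPrim = VoltSist.Id_VoltSist",
    "Cap": "Transf.Id_Cap = Cap.Id_Cap",
}
COLUMN_FOR_AC = {"Voltaje": "VoltSist.Volt", "Cap": "Cap.Cap"}


def render_from(joined_tables):
    """Build the FROM trait as a chain of INNER JOINs on the base table."""
    parts = [BASE_TABLE]
    for table in joined_tables:
        parts.append(f"INNER JOIN {table} ON {JOIN_CONDITION[table]}")
    return " ".join(parts)


def stage1_failed_rules(query, solution):
    """Stage 1: evaluate the three rules and return the traits whose rule fails."""
    failed = []
    if set(query["Tablas"]) != set(solution["Tablas"]):
        failed.append("Tablas")
    if query["OP"] != solution["OP"]:
        failed.append("OP")
    if query["AC"] != solution["AC"]:
        failed.append("AC")
    return failed


def adapt(query, solution):
    """Stages 2 and 3: apply only the FROM and WHERE adaptations that are required."""
    failed = stage1_failed_rules(query, solution)
    adapted = dict(solution)
    if "Tablas" in failed:
        # FROM requirement: keep joins the query still needs, then add the missing ones.
        kept = [t for t in solution["joins"] if t in query["Tablas"]]
        added = [t for t in query["Tablas"] if t != BASE_TABLE and t not in kept]
        adapted["joins"] = kept + added
        adapted["Tablas"] = list(query["Tablas"])
        adapted["FROM"] = render_from(adapted["joins"])
    if "OP" in failed or "AC" in failed:
        # WHERE requirement: rebuild the condition from the query's AC and OP.
        adapted["AC"], adapted["OP"] = query["AC"], query["OP"]
        adapted["WHERE"] = f"({COLUMN_FOR_AC[query['AC']]} {query['OP']} @VC)"
    return adapted, failed


# Traits from Table 7 (query) and Table 8 (retrieved initial solution).
query = {"Tablas": ["Punto", "BTransf", "Cap", "Transf"], "AC": "Cap", "OP": ">"}
initial = {
    "Tablas": ["Punto", "BTransf", "VoltSist", "Transf"],
    "AC": "Voltaje",
    "OP": "<",
    "joins": ["BTransf", "Punto", "VoltSist"],
    "WHERE": "(VoltSist.Volt < @VC)",
}
initial["FROM"] = render_from(initial["joins"])

adapted, failed = adapt(query, initial)
print(failed)
print(adapted["FROM"])
print(adapted["WHERE"])
```

Running it prints `['Tablas', 'OP', 'AC']`, followed by FROM and WHERE traits that coincide with those of Table 9.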

Applying these rules yields the adapted solution shown in Table 9.

Table 9 Adapted solution

| Trait | Value |
|---|---|
| NV | Primario |
| EB | Banco de transformadores |
| AT | CodInst, Secc, Circuito, Cod, Cap, Nempresa |
| Tablas | Punto, BTransf, Cap, Transf |
| AC | Cap |
| OP | > |
| ON | BT ∩ T ∩ TPot ∩ Tcto |
| OE | P ∩ Prov |
| FROM | Transf INNER JOIN BTransf ON Transf.Cod = BTransf.Cod INNER JOIN Punto ON Transf.Cod = Punto.CodInst INNER JOIN Cap ON Transf.Id_Cap = Cap.Id_Cap |
| WHERE | (Cap.Cap > @VC) |
| CE | (Mun.Nombre=@VC) and (Mun.Obj Contains Postes_M.Obj) and (poste = Postes_M.CODIGO_POSTE) |
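One way to check that the adapted FROM and WHERE traits form a valid query is to run them against a small in-memory database. The tables and rows below are invented toy data, and SQLite's `:VC` named parameter replaces the `@VC` placeholder of the original.

```python
import sqlite3

# Adapted FROM and WHERE traits from Table 9 (@VC rewritten as SQLite's :VC).
ADAPTED_FROM = ("Transf INNER JOIN BTransf ON Transf.Cod = BTransf.Cod "
                "INNER JOIN Punto ON Transf.Cod = Punto.CodInst "
                "INNER JOIN Cap ON Transf.Id_Cap = Cap.Id_Cap")
ADAPTED_WHERE = "(Cap.Cap > :VC)"


def toy_database():
    """Create a hypothetical in-memory database with the four tables of the query."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE Cap (Id_Cap INTEGER PRIMARY KEY, Cap REAL);
        CREATE TABLE Transf (Cod TEXT PRIMARY KEY, Id_Cap INTEGER);
        CREATE TABLE BTransf (Cod TEXT);
        CREATE TABLE Punto (CodInst TEXT);
        INSERT INTO Cap VALUES (1, 10), (2, 25), (3, 50);
        INSERT INTO Transf VALUES ('T1', 1), ('T2', 2), ('T3', 3);
        INSERT INTO BTransf VALUES ('T1'), ('T2');
        INSERT INTO Punto VALUES ('T1'), ('T2'), ('T3');
    """)
    return conn


def run_adapted_query(conn, vc):
    """Execute the adapted query with the comparison value bound to :VC."""
    sql = (f"SELECT Transf.Cod, Cap.Cap FROM {ADAPTED_FROM} "
           f"WHERE {ADAPTED_WHERE} ORDER BY Transf.Cod")
    return conn.execute(sql, {"VC": vc}).fetchall()


print(run_adapted_query(toy_database(), 15))
```

With a threshold of 15, only T2 is returned: T1 falls below the threshold, and T3 is excluded by the inner join because it does not belong to a transformer bank.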

This solution is valid; however, given its low complexity, it is not stored in the case base.

Other queries implemented and incorporated into the case base include "Overloaded Transformers", "Disconnected out of service" and "See pending complaints".

4 Results

To implement the case-based system, the SICUNE module (Spanish acronym for Intelligent Query System of the UNE) was developed within SIGOBE. SICUNE applies transformational analogy to previously formulated queries, retrieved through case-based reasoning, to answer the user's questions. During this process, the system uses the Java API Jxl to handle the case database.

SICUNE is organized into packages that group related elements. The Set, String and Position packages contain the elements for set, string and positional similarity, respectively. The Structure and Useful packages contain the elements for accessing and for handling the case database, respectively. All these packages feed a Visual CBR package, which contains the elements that link the interface to the application.

The system was designed so that new similarity measures can be added. The SIGOBE configuration parameters let the user choose which similarity measures to apply; by default, the Jaro-Winkler measure is used for ontological features, with an accuracy of 97%.
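For reference, the Jaro-Winkler similarity used by default for ontological features can be written as below, in its standard form with prefix scale 0.1 and a common prefix of at most four characters. The registry (`SIMILARITY_MEASURES`, `register_measure`, `string_measure`) is a hypothetical illustration of how pluggable, configurable measures might be organized; it is not SIGOBE's actual configuration mechanism.

```python
def jaro(s1, s2):
    """Jaro similarity between two strings, in [0, 1]."""
    if s1 == s2:
        return 1.0
    len1, len2 = len(s1), len(s2)
    if len1 == 0 or len2 == 0:
        return 0.0
    window = max(max(len1, len2) // 2 - 1, 0)
    matched1, matched2 = [False] * len1, [False] * len2
    matches = 0
    for i, ch in enumerate(s1):
        for j in range(max(0, i - window), min(i + window + 1, len2)):
            if not matched2[j] and s2[j] == ch:
                matched1[i] = matched2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Matched characters that appear in a different order count as half-transpositions.
    half_transpositions, j = 0, 0
    for i in range(len1):
        if matched1[i]:
            while not matched2[j]:
                j += 1
            if s1[i] != s2[j]:
                half_transpositions += 1
            j += 1
    t = half_transpositions / 2
    return (matches / len1 + matches / len2 + (matches - t) / matches) / 3


def jaro_winkler(s1, s2, prefix_scale=0.1, max_prefix=4):
    """Boost the Jaro score for strings that share a common prefix."""
    sim = jaro(s1, s2)
    prefix = 0
    for a, b in zip(s1[:max_prefix], s2[:max_prefix]):
        if a != b:
            break
        prefix += 1
    return sim + prefix * prefix_scale * (1 - sim)


# Hypothetical registry of string measures, selectable by name.
SIMILARITY_MEASURES = {"jaro_winkler": jaro_winkler}


def register_measure(name, function):
    SIMILARITY_MEASURES[name] = function


def string_measure(name="jaro_winkler"):
    return SIMILARITY_MEASURES[name]


print(round(string_measure()("MARTHA", "MARHTA"), 3))
```

`jaro_winkler("MARTHA", "MARHTA")` is about 0.961 and `jaro_winkler("DIXON", "DICKSONX")` about 0.813, the values usually quoted for this measure.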

5 Experimental Study

To test SICUNE, three departments of an electric company were selected; because they use information from different areas of the database, together they provide broad coverage of its contents.

The work of these areas is operational, and they need the proposed functionality in their daily work.

As a first step, the use of SIGOBE v1.0, the GIS installed in these areas, was studied to determine its approximate usage (Table 10).

Table 10 Queries to SIGOBE v1.0

| Area | Use | Average monthly queries |
|---|---|---|
| Office | See the single-line diagrams (monolineales); Analyze the limits of freeways; Address complaints | 100 |
| Engineering department | Analysis of the study area for the commission of projects; Queries on transformers | 25 |
| Customer Service area | State of complaints; Status of projects and studies | 50 |

In all three areas, SICUNE was tested for one month to validate it. Table 11 shows the results by area.

Table 11 Results of SICUNE by area

| Area | Total queries | Correct predictions | Incorrect predictions | Retained | Accuracy |
|---|---|---|---|---|---|
| Office | 230 | 221 | 9 | 23 | 96.08% |
| Engineering department | 189 | 178 | 11 | 40 | 94.18% |
| Customer service area | 147 | 140 | 7 | 18 | 95.23% |

Overall, SICUNE achieved an effectiveness of 95.23%. To strengthen it, new cases must be incorporated, especially from the engineering department, which had the lowest accuracy (94.18%) owing to the complexity of its work.
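The overall figure can be reproduced from Table 11. The 95.23% corresponds to pooling all queries (539 correct out of 566), not to averaging the three area percentages, which would give about 95.17%. The recomputed per-area values agree with Table 11 to within 0.01 percentage points.

```python
# (total queries, correct predictions) per area, taken from Table 11.
RESULTS = {
    "Office": (230, 221),
    "Engineering department": (189, 178),
    "Customer service area": (147, 140),
}


def accuracy(correct, total):
    """Percentage of correctly predicted queries."""
    return 100.0 * correct / total


per_area = {area: accuracy(c, t) for area, (t, c) in RESULTS.items()}
total = sum(t for t, _ in RESULTS.values())
correct = sum(c for _, c in RESULTS.values())
overall = accuracy(correct, total)  # pooled over all queries

for area, pct in per_area.items():
    print(f"{area}: {pct:.2f}%")
print(f"Overall: {correct}/{total} = {overall:.2f}%")
```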

6 Conclusions

The following conclusions can be drawn:

— A case-based system of the problem-solver type was designed, using the 265 static queries registered in SIGERE as the initial case database. The queries are described by eight predictive traits of different data types and three objective traits. The case database has a three-level hierarchical organization, which facilitates the access, retrieval and learning of cases.

— The similarity between two cases was determined by the weighted sum of the distances between their traits.

— The distance between traits was computed according to their type. In the case study, the best results were obtained with the Boolean distance for nominal traits and the Jaccard distance for set traits, while ontological traits were treated as strings and compared with the Jaro-Winkler distance.

— The case retention stage is still preliminary: because the case database is only medium-sized, it does not yet require revising stored cases.

— An intelligent real-time query system for the UNE (SICUNE) was implemented; it generates queries automatically, allowing the system to answer users' queries in real time.

— The experimental study shows the feasibility of the proposal.
