1 Introduction

Face detection is a computer vision task that has been investigated for several decades. It remains challenging because of the variability present in digital images of human faces, caused either by scene-specific factors (facial pose, expression and use of accessories) or by attributes of the digital image itself (lighting, sharpness, focal length, exposure and saturation) [1].

To perform face recognition in digital images, it is necessary to find a pattern that is present in all of them. Facial features such as the eyes, nose and mouth are commonly considered. To find the areas of the face where these features are located, segmentation techniques have been developed, including edge-detection filters such as Gaussian filters [2] and landmarking techniques.

Fiducial points are represented as marks on the image that indicate the location of points of interest: in facial images, they determine the location of the eyes, nose and mouth, in addition to the curvature of the face. Besides facial recognition, the detection of fiducial points is used in the analysis of facial expressions, considering standardized measures and angles. These measures and facial proportions are studied in Facial Anthropometry, defined as the measurement of the surface of the head and face [3]. This principle has been used in the forensic and medical fields, especially in plastic surgery. In 1994, facial measures of North American inhabitants were described in order to define statistically the facial proportions, which vary with age [4].

Facial anthropometry has also been applied in industry to develop working tools, such as properly designed respirators for factory employees. A 2010 investigation verified that 19 anthropometric measures were statistically different between adults over 45 years of age and workers between 19 and 29 years of age.

In addition, it was observed that there are differences in anthropometric measures between Asian, Caucasian, African, American and Hispanic people [5]. The number of fiducial points to locate is different depending on the type of application to be developed, or on the research focus. In the BoRMaN application, 20 fiducial points are detected [6], while in the proposal described in [7] there are 13 strategically chosen points, to find distances and angles among them.

The methods developed for locating fiducial points can be divided into two categories: texture-based and shape-based. Texture-based methods model the region around each fiducial point using its grayscale values. This approach usually creates a window that traverses the areas of interest in the image. It is considered slow because the size of the window increases gradually [6].

In shape-based methods, each face image has an associated set of fiducial points. A large number of face images with their respective fiducial points are used in the training stage; the result is a model that approximates the location of the facial fiducial points in a new image. The ASM (Active Shape Model) algorithm [8, 9] belongs to this second category and is the method chosen in the present work to detect fiducial points.

2 Proposed Method

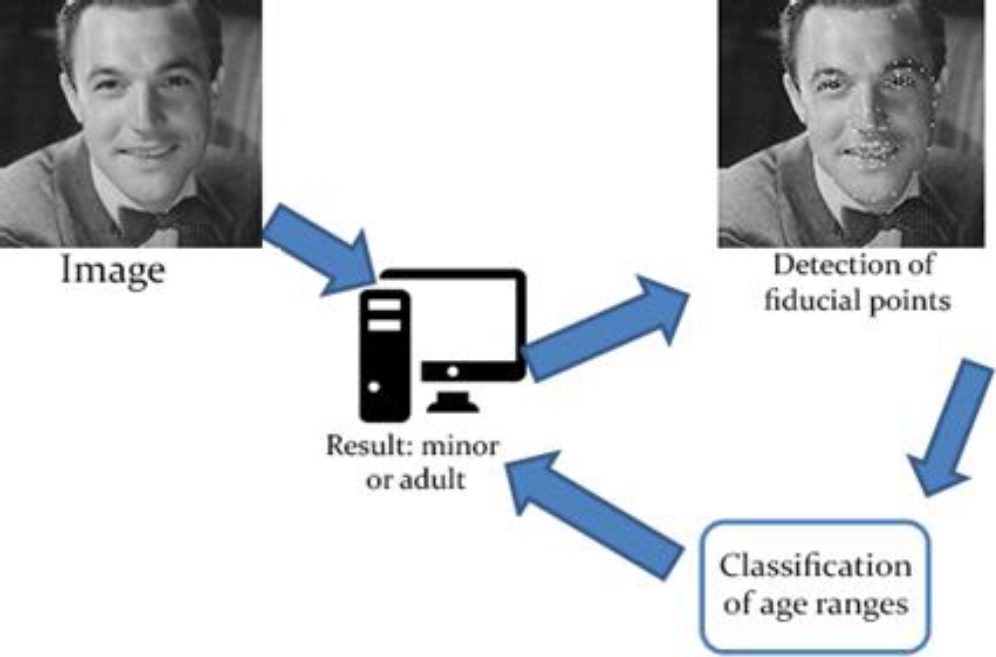

The proposed system consists of two parts, shown in Fig. 1. The first part detects the fiducial points by applying the ASM algorithm; as a result, a vector of coordinates (x, y) is obtained, where each pair indicates the location of the i-th point. The second part performs a classification into two age ranges: it involves a training stage using Discriminant Analysis and produces a classification between adults and minors.

For this study, people aged 18 years or older are considered adults, while minors are people aged 17 years or younger.

The IMDB-Wiki database [10], [11] consists of a set of images collected from the internet. In this work, only frontal images without facial expression and with dimensions greater than 123x123 pixels were selected, given that with smaller dimensions the STASM library would not detect any face. Images from the FG-NET database [12] were also used, because more images of minors were found there.
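The size criterion can be sketched as a simple filter. This is only an illustration: the record layout `(name, width, height)` and the function names are hypothetical, and the checks for frontal pose and neutral expression are not modelled here.

```python
# Minimum side length stated in the text: images must exceed 123x123 pixels.
MIN_SIDE = 123

def is_large_enough(width, height, min_side=MIN_SIDE):
    """True when both dimensions are strictly greater than the minimum."""
    return width > min_side and height > min_side

def select_images(records):
    """Keep the names of the images whose dimensions pass the size check.

    `records` is a hypothetical list of (name, width, height) tuples.
    """
    return [name for name, width, height in records
            if is_large_enough(width, height)]
```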

2.1 Detection of Fiducial Points

Active Shape Model

The Active Shape Model is a shape-based model proposed in 1995 [8]. It relies on a prior model of the shape to be detected. This prior model is applied to a new image, and the positions of its points are modified until the model fits the data of the new image. Two types of submodels are used to that end: the descriptor model and the shape model.

The descriptor model is built for each landmark or fiducial point. It is constructed in the training stage and describes the area surrounding each fiducial point in terms of intensity levels. During the search, the area around the proposed fiducial point is examined, and the position of the point is moved towards the zone that best fits the constructed submodel.

The shape model defines the permitted arrangements of the fiducial points. It is constructed in the training stage, where an average shape is obtained from a set of specimens that describe the shape to be detected. This model is responsible for verifying that the modifications made by the descriptor model are within an acceptable range and that the shape to be detected has not been distorted.
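The interplay of the two submodels can be sketched as follows. This is a simplified illustration, not the STASM implementation: the function names are hypothetical, the profile match uses a plain sum of squared differences (classical ASM descriptions typically use a Mahalanobis distance on gradient profiles), and the shape constraint assumes that orthonormal variation modes and their eigenvalues are already available from training.

```python
def best_profile_offset(sampled, mean_profile):
    """Descriptor step: find where the trained profile fits best.

    `sampled` holds intensities sampled along a strip through the current
    point; the result is the shift (in samples) from the strip centre.
    """
    k = len(mean_profile)
    best_start, best_cost = 0, float("inf")
    for start in range(len(sampled) - k + 1):
        window = sampled[start:start + k]
        cost = sum((w - m) ** 2 for w, m in zip(window, mean_profile))
        if cost < best_cost:
            best_start, best_cost = start, cost
    return best_start - (len(sampled) - k) // 2

def constrain_shape(shape, mean_shape, modes, eigenvalues, limit=3.0):
    """Shape step: keep the adjusted shape within the permitted range.

    The deviation from the mean shape is projected onto each mode, the
    coefficient is clamped to +/- limit * sqrt(eigenvalue), and the shape
    is rebuilt from the clamped coefficients.
    """
    diff = [s - m for s, m in zip(shape, mean_shape)]
    rebuilt = list(mean_shape)
    for mode, lam in zip(modes, eigenvalues):
        b = sum(d * p for d, p in zip(diff, mode))
        bound = limit * lam ** 0.5
        b = max(-bound, min(bound, b))
        rebuilt = [r + b * p for r, p in zip(rebuilt, mode)]
    return rebuilt
```

In a full search, the two steps would alternate: every point is moved by its descriptor match, and the resulting shape is then passed through the constraint before the next iteration.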

ASM was later implemented in the STASM library [13], [14], which integrates a multi-resolution search: the image is resized to several scales, ASM is applied at each one, and the shape obtained is adjusted at each scale. The OpenCV libraries [15] are also included for face detection.
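A coarse-to-fine search of this kind can be sketched as below. The halving factor, the 2x2 averaging and the `refine` callback are assumptions made for illustration; they are not taken from the STASM source.

```python
def downsample(image):
    """Halve a grayscale image (list of rows) by averaging 2x2 blocks."""
    h = len(image) // 2 * 2
    w = len(image[0]) // 2 * 2
    return [[(image[r][c] + image[r][c + 1]
              + image[r + 1][c] + image[r + 1][c + 1]) / 4
             for c in range(0, w, 2)]
            for r in range(0, h, 2)]

def build_pyramid(image, levels):
    """Return [full resolution, half, quarter, ...] with `levels` entries."""
    pyramid = [image]
    for _ in range(levels - 1):
        pyramid.append(downsample(pyramid[-1]))
    return pyramid

def coarse_to_fine(shape, pyramid, refine):
    """Fit at the coarsest level first, then carry the shape to finer levels.

    `shape` is a list of (x, y) points in full-resolution coordinates and
    `refine(image, shape)` is a hypothetical per-level ASM adjustment.
    """
    scale = 2 ** (len(pyramid) - 1)
    shape = [(x / scale, y / scale) for x, y in shape]
    for level in range(len(pyramid) - 1, -1, -1):
        shape = refine(pyramid[level], shape)
        if level > 0:
            # Coordinates double when moving to the next finer level.
            shape = [(2 * x, 2 * y) for x, y in shape]
    return shape
```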

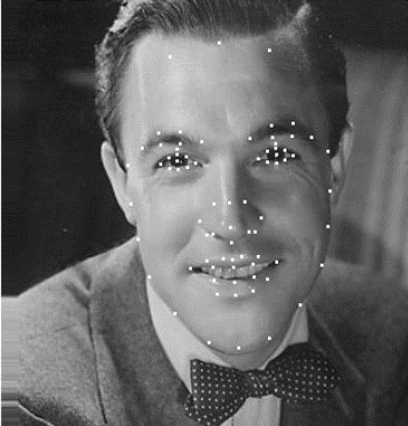

Some characteristics of the ASM application in this work are: detection only in two-dimensional digital images, and detection on frontal faces with neutral expressions, i.e., faces that do not present any type of facial gesture. By default, STASM detects 76 fiducial points, as shown in Fig. 2, delimiting the contour of the face, eyebrows, eyes, nose and mouth. From this procedure, the vector of positions corresponding to the fiducial points is obtained.

2.2 Classification of Age Ranges

Fig. 3 shows the locations of the fiducial points to be used, determined from the anthropometric measurements in [16, 17, 18, 23]. The names and locations of the points correspond to craniofacial analysis studies used in plastic surgery.

The location of each fiducial point is known through the (x, y) coordinates in the vector resulting from the ASM procedure, obtained with the STASM libraries. To obtain the anthropometric parameters specified in Table 1, the Euclidean distance between the two points that delimit each parameter is calculated (equation 1).
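Equation 1 is the Euclidean distance in the image plane. In its standard form, with $(x_1, y_1)$ and $(x_2, y_2)$ as assumed symbols for the coordinates of the two fiducial points that delimit a parameter, it reads:

```latex
d = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2} \tag{1}
```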

Table 1 Anthropometric parameters of the face. Own source

| Anthropometric parameters |
| --- |
| Binocular width (ex-ex) |
| Intercanthal width (en-en) |
| Width of the nose (al-al) |
| Width of the face (zy-zy) |
| Width of the mouth (ch-ch) |
| Width of the mandible (go-go) |
| Height of the face (n-gn) |
| Height of the nose (n-sn) |

For example, to obtain distance 1, the binocular width (ex-ex), the coordinates of the right and left exocanthion points are substituted into equation 1.
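This substitution can be sketched in code, and the same function covers every pair listed in Table 1. The landmark keys (`ex_r`, `ex_l`, and so on) and the example coordinates are hypothetical; in practice they would be taken from the ASM output, whose point indices are not listed in the text.

```python
import math

# (parameter, first landmark, second landmark), following Table 1.
# The keys are hypothetical labels; "_r" and "_l" mark right and left points.
PARAMETER_PAIRS = [
    ("Binocular width", "ex_r", "ex_l"),
    ("Intercanthal width", "en_r", "en_l"),
    ("Width of the nose", "al_r", "al_l"),
    ("Width of the face", "zy_r", "zy_l"),
    ("Width of the mouth", "ch_r", "ch_l"),
    ("Width of the mandible", "go_r", "go_l"),
    ("Height of the face", "n", "gn"),
    ("Height of the nose", "n", "sn"),
]

def anthropometric_vector(landmarks):
    """Return the eight Euclidean distances for one face."""
    return [math.dist(landmarks[a], landmarks[b])
            for _, a, b in PARAMETER_PAIRS]

# Made-up pixel coordinates, for illustration only.
example_landmarks = {
    "ex_r": (40.0, 100.0), "ex_l": (160.0, 100.0),
    "en_r": (75.0, 100.0), "en_l": (125.0, 100.0),
    "al_r": (75.0, 160.0), "al_l": (125.0, 160.0),
    "zy_r": (5.0, 120.0), "zy_l": (195.0, 120.0),
    "ch_r": (60.0, 200.0), "ch_l": (140.0, 200.0),
    "go_r": (35.0, 210.0), "go_l": (165.0, 210.0),
    "n": (100.0, 95.0), "sn": (100.0, 165.0), "gn": (100.0, 255.0),
}
distances = anthropometric_vector(example_landmarks)
```

The dictionary holds 15 distinct points, since the nasion (n) is shared by the two height measurements.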

The same procedure is performed to obtain a vector of eight distances for each image. To analyze the data, the Discriminant Analysis procedure is used, which consists of a training stage and a test stage. In the first stage, the Discriminant Analysis algorithm obtains the correlations between the measured distances and the age group to which they belong, which makes it possible to distinguish between two groups, in this case adults and minors. In the second stage, once the correlations have been obtained, a model is constructed that can classify a test element. To train the classifier, the Matlab classify function was used, which implements the Discriminant Analysis procedure.
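The two stages can be illustrated with a minimal two-class linear discriminant. This is a sketch under assumptions, not the code used in the study: it assumes the linear variant with a pooled covariance matrix and equal priors (the text does not state which options of `classify` were used), and all function names are hypothetical.

```python
def mean_vector(rows):
    """Column-wise mean of a list of feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]

def pooled_covariance(groups, means):
    """Covariance matrix shared by all groups (pooled estimate)."""
    dim = len(means[0])
    cov = [[0.0] * dim for _ in range(dim)]
    for rows, mu in zip(groups, means):
        for row in rows:
            d = [x - m for x, m in zip(row, mu)]
            for i in range(dim):
                for j in range(dim):
                    cov[i][j] += d[i] * d[j]
    denom = sum(len(g) for g in groups) - len(groups)
    return [[v / denom for v in r] for r in cov]

def solve(a, b):
    """Solve a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x

def train_lda(minors, adults):
    """Training stage: weights and bias of the linear decision rule."""
    mu0, mu1 = mean_vector(minors), mean_vector(adults)
    cov = pooled_covariance([minors, adults], [mu0, mu1])
    w = solve(cov, [a - b for a, b in zip(mu1, mu0)])
    midpoint = [(a + b) / 2 for a, b in zip(mu0, mu1)]
    bias = -sum(wi * mi for wi, mi in zip(w, midpoint))
    return w, bias

def predict_age_group(x, model):
    """Test stage: assign a distance vector to one of the two groups."""
    w, bias = model
    score = sum(wi * xi for wi, xi in zip(w, x)) + bias
    return "adult" if score > 0 else "minor"
```

With the eight distances as features, `train_lda` would receive one list of vectors per age group, and `predict_age_group` would be applied to each test vector.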

3 Experimental Results

From the databases described above, 80% of the images were used as training items and the remaining 20% as test items.
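A split of this kind can be sketched as follows. The random shuffle and the fixed seed are assumptions; the text does not say how the images were assigned to each set.

```python
import random

def split_train_test(items, train_fraction=0.8, seed=0):
    """Shuffle the items and split them into training and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]
```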

Table 3 shows the mean and standard deviation of each measured anthropometric parameter and verifies that there is a difference between adults and minors. For both age ranges, the intercanthal width and the width of the nose have close values, since the points that delimit them are approximately aligned vertically. (The images were previously scaled so that the proportion between the height and width of each face was preserved.)

Table 2 Percentage comparison of confusion matrices. Own source. A) ASM + Discriminant Analysis; B) LDA + Euclidean distance; C) AAM + LDA + minimum distance classifier

| | A) Minors | A) Adults | B) Minors | B) Adults | C) Minors | C) Adults |
| --- | --- | --- | --- | --- | --- | --- |
| Minors | 95 | 5 | 91.30 | 8.70 | 89 | 11 |
| Adults | 13.11 | 86.88 | 10.50 | 89.50 | 10 | 90 |
| Accuracy | 0.9071 | | 0.904 | | 0.895 | |

Table 3 Mean and standard deviation for each anthropometric parameter. Own source. SD stands for Standard Deviation

| Parameter | Adults (Mean ± SD) | Minors (Mean ± SD) |
| --- | --- | --- |
| Binocular width (ex-ex) | 127.127 ± 6.48 | 127.533 ± 6.195 |
| Intercanthal width (en-en) | 57.289 ± 3.612 | 56.66 ± 3.721 |
| Width of the nose (al-al) | 59.123 ± 4.653 | 59.448 ± 4.972 |
| Width of the face (zy-zy) | 197.818 ± 2.366 | 196.714 ± 2.662 |
| Width of the mouth (ch-ch) | 79.378 ± 8.539 | 78.573 ± 10.01 |
| Width of the mandible (go-go) | 128.486 ± 8.874 | 126.868 ± 8.667 |
| Height of the face (n-gn) | 165.759 ± 9.479 | 164.506 ± 10.333 |
| Height of the nose (n-sn) | 69.855 ± 5.693 | 68.405 ± 6.110 |

In Table 2, the results are compared with those of previous research. The table reports the percentages of correct classifications and false positives for adults and minors, as well as the overall accuracy.

The tests performed in B and C include the FG-NET database. The current proposal obtains better results in the classification of minors, with 95% success, reducing the percentage of false positives by 3% compared to the proposal that uses LDA (Linear Discriminant Analysis) and the Euclidean distance as the classification criterion [19], and by 7% compared to the third study, which uses AAM (Active Appearance Models), LDA and a minimum distance classifier [20].

In terms of the accuracy obtained from the confusion matrix, the current proposal reaches 90.7%, which is higher than that of the other two works.
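The relation between the confusion-matrix percentages and the accuracy can be sketched as below. The sketch assumes that each row of a matrix corresponds to the true class and is expressed in percent; the class proportions of the test sets are not stated in the text, so they appear here as an explicit parameter, and the function names are hypothetical.

```python
def weighted_accuracy(row_percentages, class_fractions):
    """Accuracy from a row-normalised confusion matrix (in percent).

    Each diagonal entry is weighted by the fraction of test items that
    belong to that class.
    """
    return sum(fraction * row[i]
               for i, (row, fraction)
               in enumerate(zip(row_percentages, class_fractions))) / 100

def balanced_accuracy(row_percentages):
    """Special case with equally represented classes."""
    n = len(row_percentages)
    return weighted_accuracy(row_percentages, [1 / n] * n)

# Percentages of methods B and C, as listed in Table 2.
method_b = [[91.30, 8.70], [10.50, 89.50]]
method_c = [[89, 11], [10, 90]]
acc_b = round(balanced_accuracy(method_b), 4)
acc_c = round(balanced_accuracy(method_c), 4)
```

Under the equal-class assumption, the diagonals of B and C average to 0.904 and 0.895 respectively. The same calculation for the current proposal gives about 0.909, slightly above the reported 0.9071, which would be consistent with classes of somewhat different sizes in its test set.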

To obtain the characteristics of the face, the previously compared works use methods that reduce the dimensionality of the data and process only the most notable features of a face, expressed as intensity levels.

Another study that, like the present proposal, uses distances between fiducial points reports an accuracy of 81.18% [21]; however, it uses a threshold of 12 years for classification, i.e., people older than 12 years are considered adults. With a threshold of 18 years, its accuracy drops to 76.81%.

Therefore, the eight distances presented in the current proposal make it possible to distinguish between two age groups, adults and minors, and the classification success rates could be improved by also considering angles between fiducial points.

4 Conclusions

In this work, a procedure to classify faces of people in digital images to differentiate between adults and minors has been proposed.

The importance of using a fiducial point detector with a low percentage of false positives lies in its contribution to obtaining more accurate distances and to making a clear distinction between the two age groups.

The 90% classification accuracy reflects a quantitative difference between the distances obtained from the faces of adults and minors, considering 15 fiducial points and 8 distances that describe the binocular width, the intercanthal width, the widths of the mouth, nose, face and mandible, and the heights of the face and nose. The locations of the fiducial points are based on craniofacial growth theories, thus applying studies from other areas such as Forensic Anthropometry and Medicine.

As future work, it remains to consider a fiducial point detection procedure that is robust to variations in the pose of the face, to extend the procedure to the analysis of profile faces, and to make a more specific classification into three or more age groups.
