1 Introduction

The pandemic caused by COVID-19 unleashed a media frenzy that helped establish the alert on the part of the health sector to society, about prevention and self-care to minimize the risk of contagion.

However, part of this frenzy has continued to circulate on the internet to this day (although overshadowed by the war between Ukraine and Russia and by the global economic crisis in force at the time of writing this article), through social networks, blogs, videos, newscasts, and various information systems. This content distorted the seriousness of the coronavirus and its variants, portraying the disease as an urban myth, a hoax, a conspiracy orchestrated by the governments of the great powers, a world purge, a means of contracting cancer or HIV, or even a form of asymmetric warfare.

Because of the foregoing, it did not take long for rumors and hoaxes to surface through hashtags on social networks, blogs, memes, email messages, spam, video montages, and photomontages of various kinds, catapulting misinformation through false news to such a level that scientific information was compromised.

One example was the association of the coronavirus with a simple flu, which facilitated the contagion and rapid spread of the disease and had the dire consequence of eroding the credibility of medical and scientific personnel [1]. Other facts related to fake news were the dissemination of erroneous medical information in the news and on social networks [2], which underestimated the impact of COVID-19 and the care required against it, together with publications asserting, with racist or xenophobic overtones, that young people and certain communities were immune, or that tropical countries should not worry because the problem was concentrated in countries with seasons.

The most notorious case of spreading false news was that of the then President of the United States, Donald Trump [3], who stated that the combination of hydroxychloroquine and azithromycin combated the coronavirus; as a result, many people believed it and consumed these drugs, putting their lives at risk in the process. In addition, the continuous messages of denial that this president issued through his Twitter account, minimizing the importance of taking prompt sanitary actions, unleashed disinformation in the United States whose consequences were felt by all its inhabitants [4].

Political leaders in other countries joined in this behavior, dismissing the pandemic to the point of not implementing biosafety and self-care measures, and even refusing, under questionable pseudoscientific arguments, to abide by the restrictive measures necessary to stop the spread of the virus.

In accordance with the above, it is evident that disinformation has become a true virus, an infodemic, and the common denominator of the mass media, whose objective has been focused on generating sensationalism, mockery, disbelief, and panic in the community, even inciting violence, hatred, and civil contempt. Added to this panorama are fraud, cyberpiracy, phishing, and cyberattacks of various kinds, which increased alarmingly during the pandemic and have become more pronounced in the post-pandemic period.

2 Disinformation

The European Commission defines disinformation as "any form of false, inaccurate or misleading information, presented and promoted to cause public harm or for profit" [5]. Expanding this definition, disinformation is understood as a premeditated and sensationalist action that seeks to create instability in public opinion through rhetorical artifices such as the demand for disapproval, the appeal to authority and fear, stereotypes, euphemisms, the manipulation of emotions, intentional imprecision, redefinition, and revisionism, which in many cases lead to extremism and identity theft.

Although misinformation is not new, the way it is disseminated is, and Information and Communication Technologies (ICT) play a fundamental role in this regard. Digital resources have opened countless possibilities and opportunities for the transmission of information, through which freedom of expression is, in some contexts, distorted or misrepresented by stratagems that ordinary citizens often fail to notice, making them believe lies or half-truths.

The problem lies in the fact that lies can turn into personal and/or popular beliefs that quickly establish themselves in social circles, thereby exponentially increasing misinformation, and the resulting manipulation of public opinion affects the image of people, institutions, and governments alike.

Terms such as "astroturfing, egging, trolls, catfish, click baiting or fake news were included in the warning lists of the media and social networking companies, drawing attention to the new digital-native misinformation" [6]. In the trajectory of the pandemic and post-pandemic, disinformation has been used as a method to create fear, chaos, and geopolitical and social instability, impregnated in many cases with virulent nationalism and populism, as is the case of authoritarian regimes such as China, Russia, and North Korea, among others, which manipulate social networks by controlling the news and messages of their citizens [7, 8].

Both misinformation and fake news have contributed to the emergence of cases of post-traumatic stress disorder [9]. The consequences of these disorders have not yet been measured in depth; however, significant repercussions are expected in the immediate future, because disinformation campaigns are not going to decrease, especially when their influence on public opinion is increasingly deep-rooted, added to the uncertainty about the control and definitive disappearance of the coronavirus in the medium and long term.

The problem of disinformation can reach such a harmful level that it causes irreparable damage to real information, delegitimizing or discrediting it, as well as those who support it. As a particular case, part of the proliferation of the coronavirus is attributed to disinformation, manifested through false news [10] combined with video montages whose realism has made people believe that the information is legitimate, sheltered by rumors, decontextualization, or pseudoscience, thereby promoting non-compliance with biosafety protocols and self-care, and even the refusal to get vaccinated.

These actions present different nuances in the behavior of people or communities in the face of a certain type of event, ranging from a simple joke to demonstrations against public, educational, sociocultural, and health policies [11], to name a few. In addition, these actions can be carried out automatically by virtual robots (bots) or by human activity (trolls), whose purpose is mainly to disseminate false information on a massive scale, because behind it there is a lucrative business sponsored in large part by the political and industrial sector [12]. The tendency to use bots as disseminators of false information lies in their autonomy: they interact with thousands of computer systems, retweeting messages or publications through hashtags. In the case of trolls, the content is created and published by human hands, and bots are then used to amplify its dissemination.

In general terms, disinformation is tainted by false, misleading, and deliberate informative rhetoric artifices mediated by a sensationalist and media component. The actors responsible for these artifices are motivated by different causes, where the veracity of the news is not part of the minimum journalistic and informative values and standards, whose connotations in many cases present complex sociocultural and political nuances marked in most cases by psychosocial factors.

2.1 Fake News

Fake news is considered sensationalist or bullying information content, disseminated through various media such as social networks, television, radio, portals, blogs, and the written press, among others. Fake news is characterized by a lack of serious scientific and journalistic support; therefore, it is considered pseudo-journalistic content.

This form of popular expression is not new; it has simply been accentuated by the different media. Its creation, as [13] points out, "follows passion, politics, dissemination and payment". To these four P's are added panic, populism, power, parody, partisanship, provocation, and pseudo-journalism, which are complementary elements of this complex scenario and form a dynamic interaction, as summarized in the following figure:

Fake news seeks to influence the community under the premise of misinformation, spreading under different nuances leading to generating social controversy, multiplying public opinion, stirring up spirits and satirizing. Likewise, they can contain a strong ideological component, often loaded with hate sponsored by third parties whose interest is to generate social destabilization.

In the same way, false news seeks to spread rapidly through ICT in a relatively short time, causing great impact depending on the context and content. Thus, the question arises: why is fake news so influential? The reasons are diverse and in many cases complex, due in part to the fact that false news can be created simply for fun, in some cases laced with black humor, seeking to distort the real news or to build information on assumptions and/or rumors from unreliable sources, as is the case with blogs and social networks.

Also, this news is based on a construct planned by an individual or group, tending to discredit, delegitimize, and/or ridicule a person, community, industry, institution, or government. The forms it takes are diverse, as [14] points out: "satire or parody, misleading content, impostor content, fabricated content, false connection, false context and manipulated content".

In the case of the pandemic, the false news was initially directed at the medical community and scientific personnel, issuing confusing, inaccurate, and in some cases contradictory information, fed by media outlets that, in search of the scoop and sensationalism, published decontextualized news based on interviews whose information ranged from alarming to optimistic.

In addition to this, the information from non-specialist personnel increased misinformation and, incidentally, fed false news, showing nuances of humor, discontent, and skepticism.

In this order of ideas, the health sector went to the point of dismissing the severity of the pandemic, interpreting it as a seasonal flu [15] or a disease typical of Asia. In this context, satires and parodies alluding to the coronavirus began, rapidly escalating to racial, misogynistic, xenophobic, and discriminatory stigma.

Parallel to this, the newscasts were not far behind, publishing that the virus was of artificial origin, weaving a misinformation ruse about conspiracies of all kinds and denial orchestrated by the WHO, Bill Gates and his Foundation, the World Bank, the Chinese government, universities, and research laboratories among others.

For their part, public figures and politicians fanned the flames of disinformation, neglecting sanitary measures and calling them unnecessary and ridiculous. What is certain in all this is the seriousness and impact of the dissemination of false information, with social and health damage that in many cases may be irreparable.

2.2 Deepfake

The deepfake (fake + deep learning, falsification using deep learning) has become a social-technological trend used to spread false information through video montages doctored with artificial intelligence (AI). A deepfake seeks to ridicule, defame, or distort real news regarding public figures, such as actors or politicians, and to a lesser degree ordinary people, by creating pornographic videos or satirizing campaigns or interviews, among other actions, through the manipulation of images and/or audio.

A particularity of a deepfake is its degree of realism, which makes it difficult to differentiate from a real video. This is thanks to artificial intelligence that uses machine learning and deep learning algorithms based on different architectures, such as generative adversarial networks (GANs) [16].

With GANs it is possible to obtain completely fictitious images, which allow launching disinformation campaigns and falsifying the profiles of certain specific targets on social networks in a short time. An example of a system that uses GAN is StyleCLIP [17, 18], which supports text-based image manipulation intuitively.
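For reference, the adversarial training that gives GANs their name is usually written as a two-player minimax game between a generator $G$ and a discriminator $D$. The notation below follows the standard formulation in the GAN literature and is not taken from this article; $p_{\text{data}}$ denotes the distribution of real samples and $p_z$ the noise distribution fed to the generator:

$$
\min_{G}\,\max_{D}\; V(D,G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator is rewarded for telling real samples from generated ones, while the generator is rewarded for producing samples that the discriminator accepts as real, which helps explain why the resulting images become progressively harder to distinguish from authentic ones.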

Face-swapping programs, or deep video portraits, are the most common way of applying deepfakes; such is the case of the mobile applications FaceJoy, FacePlay, and Reface. The first allows you to place a person's face in a fixed image; the second uses video templates to insert the face in them and modify them to see how it would look in other nationalities; and the third does the same as the previous ones, with the difference that you can insert a video in different contexts, creating high-quality montages.

Also, there is FaceMagic, which allows you to create videos, photos, and GIFs by instantly modifying the face(s) in different situations. Going much further, to the point of violating people's privacy, there is the DeepNudes web application: given any image of a clothed woman, regardless of what she is wearing, the system recreates her without clothes, leaving her completely naked. The result is so realistic that it is difficult to tell that it is a false image [19].

The deepfake uses datasets, with images and audio related to the victim. The central idea is to train a neural network that mimics facial expressions, gestures, voice, and inflections with a high degree of accuracy, which are then simulated by inputting fake content. The problem with this technological development is that it can eventually be used for extortion, scams, and defamation. Faced with this fact, the authorities are increasingly concerned about the manipulation of information that can be used by anyone with minimal knowledge of video editing and specialized software management.

With the technology available and in progress, video montages will increase in the coming years, because not only ordinary people will be able to do it with minimal effort, but also groups that seek to generate political and social instability. Likewise, the generation of critical disinformation environments is expected, where political groups will use this type of resource to discredit their competition.

This leads to the refinement of new techniques that not only improve lip synchronization, but also create facial maps with the help of AI of such quality that it will be very difficult to establish whether a video is fake. As a particular case, in the US the Center for a New American Security (CNAS) [20] has repeatedly stated that the deepfake will become a potential threat to national security.

They could even interfere in future elections, an aspect that can be replicated for any country. The CNAS statement should not be taken lightly since the impact and damage that the deepfake is causing could even reach the point of creating disinformation wars.

What is certain is that this will demand new technological developments in the coming years that can keep pace with the technologies used in deepfakes. The deepfake thus entails a potential danger in a society that has been marked by hatred and resentment, since false information with political, religious, racist, or conspiratorial overtones can trigger conflicts of various kinds that are very difficult to control and refute.

A few years ago, the technology used to create deepfakes had problems guaranteeing credible realism in certain contexts, for example, in a person's face or in unnatural blinking at certain times. With artificial intelligence systems such as DALL-E 2, DALL-E Mini, and Gato, among others, these problems have already been solved, since these systems can generate photorealistic faces. They are already accessible to the public with certain restrictions, and in the future they will most likely allow the faces of celebrities or public figures to be uploaded and used to manipulate the media.

The deepfake is a resource that can polarize information in a way equivalent to fake news, with the difference that it uses video as its main resource, which is more persuasive in the era of disinformation.

The consequence of all this is that in times of pandemic and post-pandemic ICTs and emerging technologies such as artificial intelligence showed their negative side, unleashing waves of misinformation whose social impact has been significant to date, calling into question decisions and actions of the health, scientific and government authorities in matters of health, prevention, and vaccination.

Thus, both false news and the deepfake have become key resources for promoting disinformation on a large scale, producing uncertainty in society about what is credible and what is not. Their repercussions are notorious in various fields, and they are literally used as a cybernetic weapon of disinformation by certain communities and countries, which invest large amounts of technical, technological, and economic resources to manipulate public opinion.

2.3 Consequences of Misinformation

Disinformation in the health environment has been an aggravating factor since the start of the pandemic, continuing even in the post-pandemic period, and has manifested itself in different ways, such as propaganda, malicious intentional influence, conspiracy, stigma, politics, rumors, jokes, and even cyberattacks. Some of these forms act in a more direct and harmful way than others, so that their social impact varies in terms of diffusion and permanence.

For example, cybercriminals used disinformation [21] to create panic and fear during the COVID-19 outbreak, with the aim of launching social engineering, phishing, and malware distribution attacks to deceive and to steal personal information and information belonging to hospital institutions and research centers. Also, these same strategies have been used by ordinary people to attack certain companies and hijack their information through ransomware.

Due to the media impact that misinformation brings, it is unlikely to disappear easily. This is partly due to the human tendency to disclose information, even if it is false, as a way of expressing feelings and disagreement with society.

Likewise, the media together with other ICT resources have been fundamental tools in terms of information concerning the health sector. However, it is these same means that have been used against them, overwhelming and confusing society with false or erroneous information, influencing public and political figures to such a degree that they have contributed to people's opinion being biased.

The good faith of those who trust information that is supposed to be from a reliable source is literally abused, the consequences of which have been to underestimate the risks of the coronavirus in terms of contagion [22], dissemination, and death.

Likewise, under the misinformation, thousands of people were encouraged to abuse the consumption of vitamins and the intake of toxic liquids such as methanol, chlorine dioxide and sodium chlorite, among others, which supposedly treated the coronavirus.

Information was even published stating that the use of masks was correlated with cancer and that masks were of no use in stopping contagion, and there were cases in which the consumption of dung from cows and other animals was recommended under the belief that it would cure people or make them immune.

Based on the horde of false news and disinformation spread everywhere, the authorities in the world chose to implement measures to refute them through official channels. However, the results have been unsuccessful so far due to the speed with which misinformation spreads, thus making its treatment complex.

In fact, it is possible that in the coming years there will be an increase in disinformation with geopolitical and conspiratorial nuances, marked by accusations against China and the WHO of the way in which the coronavirus emerged and its treatment in times of pandemic and post-pandemic, added to other sociocultural problems such as the increase in discrimination and xenophobia, among others.

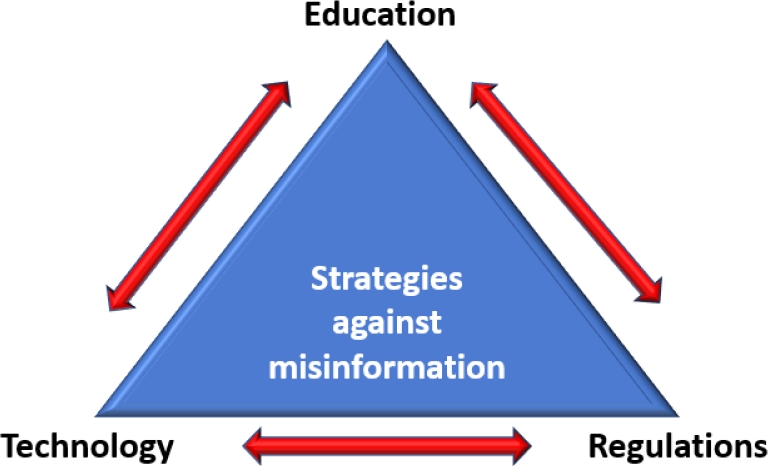

From the foregoing it can be deduced that coordinated measures are required between the health sector, the media, governments, and international institutions, which monitor the information that circulates through different channels, guaranteeing its veracity and in the process curbing disinformation through three strategies, as shown in Figure 2:

1 Education: society needs to be educated in the management of real information, teaching it how to discard that which is not real. For this, online sources are available to confirm the information and educational technologies that can be quickly developed and implemented in various environments. In the same way, consider the ethics of information (fact-checking) leading to evaluating the overexposure of data online and discerning its value.

2 Technology: various technological resources, such as AI and the blockchain [23], make it possible to verify the veracity of a news item against certain parameters; items that do not meet them are deleted from the network. Also, the use of certificates such as AMP (Authentication of Media via Provenance) is proposed, which validate the information from the device with which the image or video was captured through to the editing tools used by professionals and companies.

3 Regulations: social networks are one of the means of communication on which the greatest responsibility falls for acting as a means of disseminating false news, therefore, the existence of a regulatory control that restricts this procedure without going against the right to free expression is necessary.

These strategies are viable if they are coordinated and implemented in a timely manner using the technology at hand. In this regard, there are official companies whose job it is to create synthetic data that allow training deep learning models leading to avoiding difficulties in obtaining real data on humans.

One variant of this type of development is the use of generative adversarial networks (GANs), which allow the creation of high-quality fake images and videos, where superimposing the faces of celebrities or politicians on porn stars is relatively easy [24], as is superimposing biometric data. This panorama suggests that, in a relatively short time, the ability to discern what is real from what is false will be in the hands of AI, which is a major social and judicial problem, among other factors.

Faced with the above, the use of C2PA (Coalition for Content Provenance and Authenticity) certificates [25], an initiative of which Adobe is a founding member, is proposed as an industry standard. It assures consumers who consult a website that graphic information such as photos and videos is authentic; although downloading content in order to manipulate it cannot be prevented, at least its original source can be traced and a deepfake can be refuted.

It should be clarified that C2PA "does not say anything about the veracity of what is represented in an image, it indicates when something was done and who did it: when it was produced, how it was edited, published and reached the consumer" [26], all through a certified watermark in the metadata. What is expected in the short term is that manufacturers of cameras and smartphones adopt C2PA, and that adoption then gradually extends to those who create the content and to the chain of services, as is the case of social networks.
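The idea of recording who did what and when, without judging whether the content is true, can be illustrated with a small hash-chained history. The sketch below is only an illustration under simplifying assumptions: it is not the C2PA manifest format, it uses plain SHA-256 digests instead of certificate-based signatures, and the function names, field names, and sample values are all hypothetical.

```python
import hashlib
import json


def record_hash(record: dict) -> str:
    """Return a SHA-256 digest of a record serialized in a stable key order."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_step(chain: list, actor: str, action: str, timestamp: str) -> list:
    """Return a new chain with one more step linked to the previous step's hash."""
    prev = chain[-1]["hash"] if chain else ""
    body = {"actor": actor, "action": action, "timestamp": timestamp, "prev": prev}
    return chain + [{**body, "hash": record_hash(body)}]


def chain_is_intact(chain: list) -> bool:
    """Check that every step matches its own hash and links to its predecessor."""
    prev = ""
    for step in chain:
        body = {key: value for key, value in step.items() if key != "hash"}
        if step["prev"] != prev or record_hash(body) != step["hash"]:
            return False
        prev = step["hash"]
    return True


# Hypothetical life cycle of an image: produced, edited, published.
history = []
history = append_step(history, "camera", "captured", "2023-01-01T10:00:00Z")
history = append_step(history, "editor", "cropped", "2023-01-01T11:00:00Z")
history = append_step(history, "publisher", "published", "2023-01-01T12:00:00Z")
```

Altering any recorded step after the fact breaks the chain, so `chain_is_intact` returns `False`; as in the quotation above, this says nothing about whether the image itself depicts something true.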

Another alternative related to C2PA is Project Origin [27], an alliance of organizations such as the BBC, the New York Times, CBC/Radio-Canada, Microsoft, and Spotify, among others, that uses a Microsoft development called Authentication of Media via Provenance (AMP). It seeks to verify published images, videos, and audio by analyzing the metadata and cryptographic hash of each file, whose digital fingerprint changes automatically when the file is manipulated. These alternatives are not available to the public, since that would give the creators of fake news tools the information needed to counteract them.
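The property relied on here, that any manipulation of a file changes its cryptographic fingerprint, can be shown in a few lines. This is a minimal sketch using SHA-256 from the Python standard library; it is not the Project Origin implementation, and the function names and sample bytes are illustrative assumptions.

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of the file contents as a hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_published(data: bytes, published_digest: str) -> bool:
    """Compare a file's fingerprint with the digest announced by the publisher."""
    return hmac.compare_digest(fingerprint(data), published_digest)


# Hypothetical media bytes standing in for an image, video, or audio file.
original = b"frame-bytes-of-a-published-video"
published = fingerprint(original)

# A manipulated copy differs by a single byte.
tampered = original + b"x"
```

With these values, `matches_published(original, published)` is true and `matches_published(tampered, published)` is false. In practice the published digest would itself need to be signed or distributed through a trusted channel, which this sketch leaves out.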

2.4 Infodemic and Health Implications

Fake news in times of pandemic, and later during vaccination campaigns, caused an exponential growth of misinformation, a phenomenon that is summarized in the term infodemic [28]. The situation has been overwhelming: despite the number of infections and deaths, the infodemic has undermined society's trust in health institutions and their members, as well as the credibility of the prevention and vaccination policies put forward by governments.

The effect has been such that information circulating on the internet questions the effectiveness of the vaccines, encouraging people not to get vaccinated under the argument that vaccination is a massive purge, or that its objective is to sterilize the population and thereby stop the demographic explosion, among other nonsensical statements. To this are added the instigation not to wear a mask and to consume unapproved alternative medications, alcoholic beverages, and concoctions, among hundreds of other hoaxes.

Another aspect of the infodemic is related to publications in which authors have used the relationship between COVID-19 and the immune system as a hook to promote articles or books whose scientific support is debatable. Such has been the case of ancestral medicine texts that supposedly prevent and treat the coronavirus, making people believe that they are the lifeline for their ills. These types of actions are considered elaborate fake news, which in many cases is supported by rulers and even by unqualified medical personnel.

It is difficult for citizens in general to sift through the rain of misinformation that they constantly receive, which can eventually become dangerous: dismissing scientific studies and medical care, an infected individual may focus their efforts on herbs and home remedies, exposing not only their own life but also the lives of those around them, and becoming a potential vector of spread for their community. These actions are possibly reprehensible; however, the direct blame falls on those who publish false information without measuring the consequences of their actions, taking advantage of the chaos and the despair of people and society in general.

With the technological resources available to society, the proliferation of the infodemic has worsened, this due to the increase in internet connectivity and access to various free or low-cost digital resources, which allow the editing and manipulation of images, texts, and audios with total freedom. Consequently, the publication and dissemination of false information has various channels, most of which have proven to be impossible to control forcefully.

As a particular case, social networks have proven to be extremely difficult to regulate and have become a focus of violence and digital intolerance, despite unsuccessful attempts to prohibit and eliminate such actions. The problem is further exacerbated when influencers manipulate their audience irresponsibly, without considering the social damage that their actions cause. Additionally, with AI and machine learning applied to obtaining geospatial data, huge amounts of information can be extracted, access to which was exclusive to intelligence agencies a few years ago, thus allowing those who have the technical and technological resources for this purpose to manipulate it at their convenience.

3 Discussion

Can you trust what you are seeing through the different media? The answer to this question is complex: false news disseminated through the media has repeatedly become a social evil, causing, apart from misinformation, problems of a diverse sociocultural and geopolitical nature and significantly affecting the health sector worldwide. For example, a false news item that had a high social impact was that of then-President Donald Trump, who stated that ingesting bleach could fight the coronavirus in a very short time.

The result of this news was that several people were hospitalized for ingesting detergent. This publication generated strong controversy and rejection from the medical community, which was later corrected not by Trump but by the New York Department of Health. Similarly, leaders of other countries underestimated contagion and self-care, whose example was seconded by the population with consequences already known by public opinion.

The foregoing highlights the effects of politics in the era of misinformation, which has also reached the issue of vaccination: the health function of vaccines is questioned with the argument that the time required for testing and obtaining the vaccine is in itself doubtful, or that vaccines are genetic markers equivalent to those used in cattle, among other unsubstantiated assertions.

The tragedy of this situation is the presence of personal, economic, and geopolitical interests. In addition to this, there are organized groups on the Internet whose purpose is to promote violent and intolerant hate messages, which are difficult to eradicate from the web due to their great recovery capacity [29].

Misinformation and false news have dire consequences for the credibility of society, added to the probability that it will worsen in the coming years; this is partly due to the technological evolution itself that facilitates the proliferation of digital tools such as portals that allow the creation of false news, or AI-based systems using algorithms and bots, which ironically can be used to counter disinformation by filtering it.

In the case of AI, there are advanced platforms available to the public such as GPT-3 and GPT-4 (Generative Pre-trained Transformer), which theoretically can indirectly create fake news (manipulating it with counterintuitive rhetorical arguments) and cyber-hooks or clickbaits [30] of such a degree of quality that it will be difficult to know if they are real or not.

Similarly, there is the BLOOM system (BigScience Large Open-science Open-access Multilingual Language Model) [31], much more advanced than GPT-3, capable of generating text in 59 languages, and the recent ChatGPT based on GPT-3. These tools present unprecedented potential in technological terms, raising the debate about their social implications if they are manipulated to create false information.

In addition, GPT-4, reportedly made up of approximately 100 trillion parameters (a figure that OpenAI has not confirmed) as opposed to the 175 billion parameters of its GPT-3 counterpart, is a robust system that makes it possible to run generative algorithms capable of creating realistic information and videos of virtually anything. In the case of fake news, it is only a matter of time before false information about anyone can be created from a few images.

This suggests that, with the continuous advancement of AI and the creation of a public database of faces, it will be possible to create the image of a fictitious person and from there assemble a whole apparatus around it with the aim of using it in illicit acts. Although it seems like science fiction, the possibility exists, and the worst of all is that it will be very difficult to tell whether this "avatar" is human or not.

It can be affirmed that, before disseminating information, it is essential to confirm its veracity by comparing it with credible sources. Likewise, one should assume a critical role when consulting information and question what is read; for this, it is suggested to consult web portals that help to debunk false news, such as: WHO, Verified (UN), European Centre for Disease Prevention and Control, 1de2.edu, Truly Media, FactCheck.org, Botometer, Snopes, Hoaxy, Fighting disinformation, Credibility Coalition (CredCo), Google News Initiative, Knight Foundation, W3C Credible Web Community Group, etc. As a particular case, the WHO has established campaigns aimed at identifying and reporting incorrect or misleading information that is potentially harmful in times of pandemic and post-pandemic.

Regarding the role of the media, they must focus on maintaining the credibility, honesty, transparency, and trustworthiness of the news disclosed to the community, and on providing verifiable information to those who need it. Information verification, or fact-checking [32], does not conflict with freedom of the press. In fact, there are online resources that allow this task to be carried out quickly and easily.

However, although these resources partially counter disinformation and false news, it is necessary to formulate regulations that curb its different modalities and contexts, in a similar way to what was done by Singapore [33], but without going to extremes.

There are initiatives in this direction, but there is still a lack of technical and technological tools to facilitate this work, such as content verification systems, designed under international standards that provide security without violating freedom of expression. This task falls not only on the legislative and technological entity, but on the community in general, where respect for others prevails without violating fundamental rights.

As additional information, to verify the validity of a piece of news and counter misinformation, it is recommended:

1 Set aside any prejudiced aspects of the news marked by hate speech or by racist, misogynist, sexist, xenophobic, and discriminatory content.

2 State entities must take ownership of the problem by removing from the web those networks or groups that promote hate and misinformation. Similarly, they should create specialized groups that counteract the activities of those who promote misinformation and intolerance.

3 Penalize and prosecute those who create and spread false news. It is important to note in this regard that there are criminal laws against those who, through any technological means, publicly encourage, promote, or directly or indirectly incite hatred, hostility, discrimination, violence, genocide, and crimes against humanity, among others. The motives are commonplace and range from racism and anti-Semitism, through personal or group ideology, religion, or beliefs, to matters of family, ethnicity, race, or nationality.

4 Combine efforts between government entities, researchers, and technology companies to develop coordinated technical and technological strategies that counteract disinformation and all kinds of actions that violate human rights.

5 Promote research aimed at disseminating science more assertively to the public. [34] cites Caulfield (2020), who states that "researchers need to have a greater presence on social networks and disseminate science in the same symbolic battlefields that are currently occupied by disinformation".

6 For society in general, try to be as objective as possible, without letting emotions intervene. This means thinking twice before forwarding information to third parties without having verified its source.

7 Compare the information with reliable sources: indexed articles, renowned newspapers and newscasts, anti-fake-news web portals, etc.

8 Check the publication date of the news and use online image and video verifiers, because information is often recycled to support false news.

9 Collaboration of various organizations such as the WHO, Google, Tencent, Twitter, Meta, Pinterest, TikTok and YouTube, among others, to focus efforts on denying hoaxes and demystifying false information.

10 Transparent dissemination of reliable scientific data by governments, publishing them proactively under laws and policies concerning the right to information.

11 Expand the services provided by platforms such as the UN's "Verified" (https://shareverified.com/es/), whose objective is to provide reliable and accurate information about the coronavirus, among other aspects of social interest.

12 Promote initiatives such as EleutherAI [35], characterized by its research on the interpretation, security, and ethics of large AI-based language models, which can be used for criminal purposes such as the creation and dissemination of false news or deepfakes.

13 Apply technical solutions based on AI, namely machine learning [36], deep learning, and natural language processing, using methods such as logistic regression, the multinomial Naive Bayes model, and support vector machine (SVM) classifiers, which perform reasonably well on the topic at hand. For example, there are already automated detection systems that filter false information and reinforce what is real. In this same direction, AI is used to eliminate bots that can be used to carry out distributed denial of service (DDoS) attacks [37].
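As an illustration of the multinomial Naive Bayes approach mentioned in item 13, the following is a minimal sketch written in plain Python. It is not the method of any system cited here. The class name `MultinomialNaiveBayes`, the whitespace tokenizer, the labels "false" and "reliable", and the four training headlines are all hypothetical and were invented for this example. A real detector would need a large labeled corpus and careful evaluation.

```python
import math
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately crude tokenizer)."""
    return text.lower().split()


class MultinomialNaiveBayes:
    """Multinomial Naive Bayes text classifier with add-one smoothing."""

    def fit(self, texts, labels):
        self.class_counts = Counter(labels)      # documents per class
        self.word_counts = defaultdict(Counter)  # word frequencies per class
        self.vocabulary = set()
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.vocabulary.update(tokens)
        return self

    def log_scores(self, text):
        """Return the unnormalized log-probability of each class."""
        total_docs = sum(self.class_counts.values())
        vocab_size = len(self.vocabulary)
        tokens = [t for t in tokenize(text) if t in self.vocabulary]
        scores = {}
        for label, doc_count in self.class_counts.items():
            score = math.log(doc_count / total_docs)  # class prior
            total_words = sum(self.word_counts[label].values())
            for token in tokens:
                count = self.word_counts[label][token]
                # Add-one smoothing keeps unseen class/word pairs above zero.
                score += math.log((count + 1) / (total_words + vocab_size))
            scores[label] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Hypothetical toy corpus, invented purely for illustration.
TRAIN_TEXTS = [
    "miracle cure kills the virus overnight",
    "secret cure hidden by governments",
    "health agency publishes vaccine trial results",
    "study reports vaccine trial results in journal",
]
TRAIN_LABELS = ["false", "false", "reliable", "reliable"]

model = MultinomialNaiveBayes().fit(TRAIN_TEXTS, TRAIN_LABELS)
print(model.predict("miracle cure hidden"))
print(model.predict("vaccine trial results"))
```

A classifier of this kind only learns word statistics from its training labels, so its verdicts are a signal for human fact-checkers to review, not a determination of truth.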

As a complement to the above, Google offers a tool that helps verify whether an image is false. The procedure is simple: look for the fact-check label that appears at the bottom of the image results. Selecting this label displays a summary that indicates the authenticity of the image, based on verification of the web page where it is published.

Intel has also created FakeCatcher, an AI-based deepfake detection system that determines in real time whether a video is fake, with a reported accuracy rate of 96%, by analyzing subtle variations in pixel color related to blood flow signals in a person's face.

As can be seen, the role of AI in disinformation is a fact. It only remains to polish certain algorithms for automatic systems to reach a level at which it is difficult to distinguish between real and false information. In the case of fake news, advances in AI, particularly natural language processing (NLP) algorithms [38] such as those used in GPT-3 and GPT-4 [39], could be used for this purpose with a high degree of credibility, even supported by reliable sources that have been fabricated or taken out of context.

GPT-4 is not the only powerful AI tool at the time of writing. Comparable systems include Google's Switch Transformer, with 1.6 trillion parameters, and WuDao 2.0 (China), which is 10 times larger than GPT-3. Another AI that has aroused interest because of its released code and free access is OPT (Open Pretrained Transformer) from Meta. In theory it is to be used exclusively for research; however, questions have been raised about its potential to generate false information and racist language, as with LaMDA, an AI model for dialog applications.

Thus, AI can have a great social impact. Such is the case in geopolitics, where the manipulation of information and censorship can undermine credibility, as in the war between Ukraine and Russia [40] or the spread of Covid variants in China. It is no secret that AI can be used to promote false news, while other AI tools and initiatives make it possible to evaluate the veracity of information; examples include Fake News Challenge, Spike, Snopes, Hoaxy, and Deep Entity Classification (DEC), among others.

The world faces an uncertain future scenario in terms of massive false information, which leads us to think that society will be subject to perceiving information in a fractional way, with the risk of assuming radical positions that possibly lead it to make bad decisions, under polarized versions and strategic propaganda aimed at destabilizing the sociocultural and geopolitical construct, among others. The challenge of fighting against disinformation is ongoing, demanding that measures be taken to guarantee that freedom of expression is not misrepresented and leads society to greater disinformation chaos with the corresponding consequences.

Similarly, in health matters, with regard to demystifying what circulates through the networks and other channels about vaccines against Covid-19, [41] points out that "the authorities must be very clear about what is known and what is not known, involve the public in the debates, take their opinions seriously and build trust through transparency". This action will undoubtedly contribute to reducing uncertainty in society.

Added to the above is the massive manipulation of information, which has proven to be very dangerous because it moves large masses of people. On a more localized scale, fake news is being used to attack corporate targets: a meeting can be silently recorded and the information then manipulated to incorporate false and/or damning data that, if leaked, would cause a commercial shock, thereby compelling the company to pay to prevent its publication.

Disinformation is not limited to the written word and video; it also extends to sound. Such is the case of an AI developed by the company Sonantic [42], which uses the voices of artists and is aimed at the entertainment field. However, this same technology can be used to generate voices for deceptive purposes.

A particularity of this AI is that it can be configured so that the voice conveys fear, sadness, anger, happiness, or joy, and it can also express irony, shyness, and even boastfulness. As if that were not enough, voice patterns such as tone, intensity, and vocalization can be modified, giving the listener the sensation of a smiling tone, accompanied by breaths equivalent to those of a human being.

4 Conclusions

Disinformation in the health sector in times of pandemic and post-pandemic created all kinds of social and geopolitical tensions that have demanded regulatory, technical, and technological actions to mitigate it. The problem may become more acute in the coming years, due in part to the continuous advances in AI that will make it more difficult to unmask false news or false videos, added to multisectoral cyberattacks promoted by criminal gangs and the business that revolves around the deliberate dissemination of false information.

Fake news will not end unless there are policies that punish those who promote misinformation. Deepfakes, however, are another matter: they are a disruptive development with profound implications for the various tabloid and corporate media, owing to the technology used to create them. As a consequence, the journalistic and judicial evidence based on audio and video that is currently relied upon will be open to challenge, because it can no longer be treated as undeniable proof of a fact.

As if this were not enough, in this context AI is going to assume a technological role that weakens justice. However, there are alternatives for validating the authenticity of audio and video files, such as the use of blockchain.

The problem lies in the technological implementation and its corresponding costs, and in the fact that deception technologies advance much faster than the technologies that counteract them.
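To make the idea of blockchain-based validation of audio and video files more concrete, the following minimal sketch shows only the core mechanism: each file is reduced to a SHA-256 fingerprint, and each record is linked to the previous one by hash. It is an assumption-laden illustration, not a description of any deployed system. The names `Ledger`, `register`, `is_registered`, and `chain_is_valid` are hypothetical, the ledger lives in a single process, and there is no distribution or consensus as in a real blockchain.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of a media file's raw bytes."""
    return hashlib.sha256(data).hexdigest()


class Ledger:
    """Append-only, hash-chained list of media fingerprints (hypothetical)."""

    def __init__(self):
        self.blocks = []

    @staticmethod
    def _block_hash(index, media_hash, previous_hash):
        payload = json.dumps(
            {"index": index, "media_hash": media_hash,
             "previous_hash": previous_hash},
            sort_keys=True,
        ).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def register(self, data: bytes) -> dict:
        """Record the fingerprint of a file at the time of publication."""
        previous_hash = (
            self.blocks[-1]["block_hash"] if self.blocks else GENESIS_HASH
        )
        index = len(self.blocks)
        media_hash = fingerprint(data)
        block = {
            "index": index,
            "media_hash": media_hash,
            "previous_hash": previous_hash,
            "block_hash": self._block_hash(index, media_hash, previous_hash),
        }
        self.blocks.append(block)
        return block

    def is_registered(self, data: bytes) -> bool:
        """True only if these exact bytes were registered earlier."""
        media_hash = fingerprint(data)
        return any(b["media_hash"] == media_hash for b in self.blocks)

    def chain_is_valid(self) -> bool:
        """Recompute every link; any altered record breaks the chain."""
        previous_hash = GENESIS_HASH
        for index, block in enumerate(self.blocks):
            expected = self._block_hash(
                index, block["media_hash"], previous_hash
            )
            if (block["index"] != index
                    or block["previous_hash"] != previous_hash
                    or block["block_hash"] != expected):
                return False
            previous_hash = block["block_hash"]
        return True


# Hypothetical usage with stand-in byte strings instead of real media files.
ledger = Ledger()
original = b"original video bytes"
ledger.register(original)
ledger.register(b"second recording")
print(ledger.is_registered(original))
print(ledger.is_registered(b"original video bytes, edited"))
print(ledger.chain_is_valid())
```

A check of this kind shows only that a file is byte-for-byte identical to what was registered. It says nothing about whether the registered recording was truthful in the first place, and any re-encoding of a genuine file changes its fingerprint.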

Disinformation and the flow of false news issued by the different communication media must be considered as a problem and risk of high social, geopolitical, health and security impact, and as such the legislation must act accordingly to minimize them.

The reason lies in the fact that the information society receives is often out of context and/or distorted, so that its veracity and reliability are debatable. As a result, many people are susceptible to being influenced by misinformation, since in one way or another it confirms their beliefs, which are reinforced as the same content recurs across different media until it becomes convincing, completely distorting the truth.

Fake news spreads much faster than real news [43]. It is therefore advisable to be wary of news that arrives by different means and, before forwarding it to third parties, to consider the implications in terms of violating the honor, image, and good name of a person, community, or institution.
